
My new essay on Muslim Presidents is up at Medium.

I'm happy to announce Heterodox Academy.

We are a group of scientists and scholars, mostly in the social sciences, who have come together to advocate more intellectual diversity in academia. We include Jonathan Haidt, Steven Pinker, Charlotta Stern, Dan Klein, Jarret Crawford, Lee Jussim, Scott Lilienfeld, April Kelly-Woessner, Gerard Alexander, Judith Curry, and many others. We launched the website roughly in concert with my colleagues' and my new paper in Behavioral and Brain Sciences (older preprint here for those without journal access).

In recent decades, our academic institutions have become strikingly ideological. It would be one thing if we saw competing ideologies battling each other in a war of ideas – it would be disappointing that scholars were sorted into ideologies, rather than looser commitments, but at least different systems and ideas would be in play. The problem in our era is not just that academics are excessively ideological, but that almost all of them subscribe to the same ideology.

This place, this time, is but one place, one time, in the broad sweep. We're part of a story, an enterprise, that began thousands of years ago, and will continue for thousands of years after we're dust. It would be foolish of us to assume that whatever ideology or philosophy happened to be popular when we came of age was the last word, or even particularly impressive. It would be lazy and incurious of us to assume that the political landscape of our day should bind us, or should give us the answers to our questions. I think it's time for scholars to realize that the nostrums of the early 21st-century academic left, the cobwebs of Marxism, and the various iterations of structuralism are all well and good, but there is much more between heaven and earth than all of that. Thus, Heterodox Academy.

This isn't particularly about the heavy discrimination against conservatives in academia, though we would certainly benefit from having far more of them in the academy, and certainly in social psychology.
We have little hope of achieving a reliably valid social science when its researchers are as invariant and narrow in their culture and politics as social psychologists are. This is about bringing in more non-leftists, an unbounded and multidimensional category that extends far beyond one side of a left-right line. It would be a mistake to assume that the only challenge the left faces is from the right. This is about bringing in more moderates, libertarians, idiosyncrats, and, perhaps most precious of all, non-political scholars and scientists. Social psychology in particular would benefit from more researchers who do not derive so much of their identities from their (uniform) political ideology. Leftist activists are using social psychology as a vehicle to wage larger, crass political campaigns. I would not want their opposite numbers on the right to flood into the field – I'd want an influx of sober scientists. I'm optimistic.

I recently heard a story that reminded me of how much graduate students can suffer.

Some graduate students out there suffer enormously and needlessly because of unprofessional and untrained mentors. To be fair, scientists in academia wear lots of hats. They typically have to do all these things:

Unfortunately, faculty tend not to have any training in management, leadership, or mentorship. In the private and public sectors, managers and leaders are trained, and in the best organizations their training never ends – they might have new training every year, perhaps a week-long seminar focused on a different aspect of management or leadership. Just as importantly, managers are often selected for their management ability to begin with. Some faculty are naturally good at mentorship, and many are passable, but this is mostly due to luck and the sheer decency of these people. Since academic culture doesn't train people in mentorship, and doesn't reward good mentors or police bad ones, we'd expect, as social scientists, to see a lot of bad mentorship – and we do.

At its worst, it's absolutely heartbreaking to watch. I've never seen suffering like grad student suffering – not anywhere I've been, any job I've had, any organization I've experienced, not even the Navy. I've never seen so many people in therapy, or so many people on anti-depressants. I've seen people ground into nothing, having been convinced that they were worthless and hopeless, quitting their programs two years in. The emotional beating some people take from their mentors and programs is not functional. There is zero evidence that it works. Much of what we see in academia is unnecessary and unscientific torture. Much of it is simply a function of psychologically imbalanced and unprofessional faculty.

Yes, many of us suffered greatly in graduate school and emerged from it fine. Perhaps we can trace episodes of professional growth to times we suffered, times when our mentors told us we weren't good enough or our work wasn't good enough. That does nothing to empirically support the practices I'm talking about. We can tell people that their paper sucks or their idea sucks without telling them that they suck, or that they'll never make it.
It's not clear why anyone would think that the global, essentialist "you suck" judgment is functional, or that it does any useful mentorship work.

I wish I could bring some of these people back, people who left their programs and advisors in a trail of tears. I wish I could mentor them myself, show them their strengths, flood them with optimism and support, and build, step by step, week by week. Maybe some of them would end up being titans in the field – I wouldn't rule out the possibility. (I just finished graduate school and am on the faculty job market, so here I'm taking liberties and fast-forwarding to a Joe-as-faculty universe. I used to manage teams of software professionals before grad school/social psychology, so I'm fairly confident in my mentorship skills.)

If you're in graduate school now, if you're suffering badly and need someone to talk to, I'm more than happy to talk, to give advice, to listen. If you're reading this, you're probably in social psychology or related fields, but your field doesn't really matter. Maybe a therapist would be better than a Mexican, but I'm not too sure – the evidence for therapy is mixed and exquisitely complicated, and therapists and Mexicans are not mutually exclusive anyway. In any case, I'm here, I'm a good listener, and I care. There's too much needless suffering out there, and if I can help one person, or even half a person, this post will have earned its keep. Hit me up. Now, I know some grad students who never use their phones for talking, and even find it awkward to talk to someone on the phone. I'm open to all mediums, but if you haven't experienced long, unhurried conversations with other humans, I highly recommend it. [email protected]

My empirical work has mostly focused on the emotion of envy. I have a lot of data that I need to publish. I've been frozen lately by a sort of methodological crisis and metamorphosis.
I started thinking about a number of validity issues after working on the BBS paper, and I couldn't move forward with some of my research until I sorted out whether it was valid or useful. (I'm as hard on my own methods as I am on everyone else's.) I'm trying to integrate much of it now, and I thought I'd share some interesting things I've learned in trying to uncover some causes and predictors of envy.

Eliciting Envy

So far, recalled experience has been much more useful than attempts to elicit envy with canned inductions. A recalled experience is what it sounds like – ask the participant to recall a time when they experienced envy toward someone and have them write briefly about it. To elicit a new envy experience during a study, I've had people read various narrative passages or articles. I stopped using student samples a few years ago, but I have lots of student data from before; with ASU students, for example, I had crafted fake student newspaper articles about a particularly affluent (gender-matched) student. More recently, in looking at envy and anti-Semitism, I've used passages that report some of the ways in which Jews excel, from higher mean IQ scores to household incomes and Nobel Prize take rates. The basic structure with these inductions is that you have the participant read the article and then look for envy downstream, by direct measures (e.g. "How envious do you feel right now?" sandwiched within other emotion items like anger, happiness, etc.) or oblique measures (mostly the kinds of things discussed by van de Ven et al.).

I've never seen a lot of envy with the canned inductions. Maybe 10 to 20 percent of the sample will report any level of envy in that context. However, with the recalled experience method, most participants will have something to say about a past envy episode. This method requires trained coders to extract whatever dimensions you're interested in (or you could automate it with text analysis software), but it gives you much richer and, I think, more valid information. In fact, I wouldn't assume that "envy" is commensurable across these methods. That is, what we're calling envy in one method may not be the same thing that we're measuring in the other.
The people who report envy after reading about an ASU student, or Jews, might not be the same people, in a sense, as those reporting vivid envy episodes in a recall exercise. That is, the emotional experience might be substantially different, and the personality predictors different as well.

One of the most important lessons Lani Shiota taught me is the benefit – and sometimes the absolute necessity – of measuring emotions without referring to those emotions by name. ASU gives you excellent evolutionary psychology training. Well, I criticize the evo psych framework sometimes, but it makes us better social psychologists than we would be without it – even if we don't fully agree with the framework or some of the hypotheses generated by it. The evo psych perspective on emotions looks at their function, or their fitness value in the ancestral environment, and so when we want to carve nature at its joints, we don't want too many modern words and abstractions in the way. Now, I actually used the term envy in asking people to recall an episode. I didn't say "Think of a time when someone else had more than you did, or had something you badly wanted, and it made you feel bad." Rather, I asked explicitly for an envy experience. However, the open-ended nature of the recall method gets you much closer to carving nature at its joints. You see a broad range of elicitors and situations. You see the role of gender in ways that would be hard to pick up with canned inductions. You're not as restrained by your priors. (Yes, there's a potential trade-off with effect hunting, cherry picking, multiple comparisons, etc., but I think those can be managed. Exploratory research is extremely promising and should be more common.)

Sibling Rivalry

I never thought about this, predicted it, or had any hypotheses about it. It simply never occurred to me. I'm the oldest of three, and the only boy. We're evenly spaced about six years apart.
I have no experience with sibling rivalry – I've never been in competition with my (much younger) sisters, and have never resented anything they've done or achieved. I'm a low- or zero-envy person in general – perhaps another reason to prefer the recall method and be open-minded. Sibling rivalry is real. I was struck by some of the participants' accounts. The most striking pattern is that some women really hate their sisters. It was heartbreaking to read – there's real pain out there, people suffering greatly from the achievements or life status of their siblings (especially their sisters). One question in the recall exercise asked people what they wished had happened to the person back then, or what they wished they could have done to the person. One woman reported that she wanted to "beat her bloody", referring to her sister. The circumstance that evoked the envy was that her sister had a "good husband" and a nice house. Her sister hadn't actually done anything to her as far as she reported – she just had a good husband and was affluent (perhaps because of her husband). Well, that might be biased on my part. She might maintain that her sister had done something to her – it just may not look, to a man like me, like something that was done to her. This probably highlights some of the pressures and expectations women in our culture place on themselves and/or we place on them. Some women are defining themselves through the men they marry. Perhaps that's too strong – maybe it's more that finding a good husband is a bigger box to check for some women, and perhaps a more time-critical one, than the converse is for men. I think this would be much less true with academic women, but I suspect it's more intuitively accessible to them than it is to men. Women know women. This is actually a good example of the value of multidimensional diversity in any social science or branch of research psychology.
We'll be limited in our ability to understand major swaths of human motivation if we don't experience them ourselves or come from the requisite culture. The BBS paper focuses on political diversity, but I increasingly think other dimensions are just as important – we need a lot more black people, Latinos, Natives, people from rural communities, people who have served in the military, people from non-Western and non-affluent cultures, and probably more masculine men. (I don't think "men" and "women" are necessarily sufficient categories. Semi-relatedly, GLBT people are not underrepresented in social psychology – they're overrepresented (the percentage in our field is greater than the percentage in the general population), which is why I didn't mention them. Maybe we still need more. I'll have to think about this.) There will always be selection biases. Human populations vary on all sorts of dimensions, including interest in things like social psychology or football. For example, maybe ex-military types will be less interested in being social psychologists (though I think we might be surprised – understanding human behavior is somewhat central to military life, and leadership and performance are constant areas of focus). I think we could do a lot to make our field more inviting to diverse groups. The culture of social psychology is far too narrow and specific. I don't think a good social science can be so culturally specific (in our case, white/Asian urban liberals, overwhelmingly) – I think the very nature of social science requires breadth and diversity on multiple dimensions. The out-of-nowhere sibling rivalry discovery made me think about this again, how my personality, my background, my birth order, and my gender had made me blind to a major envy elicitor...

Klotzbach and Landsea have published an interesting new paper in the Journal of Climate on the frequency and percentage of Category 4-5 hurricanes.

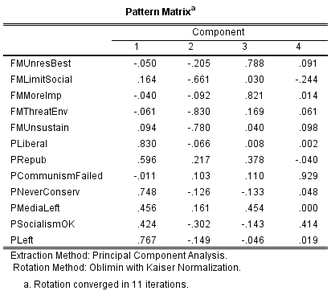

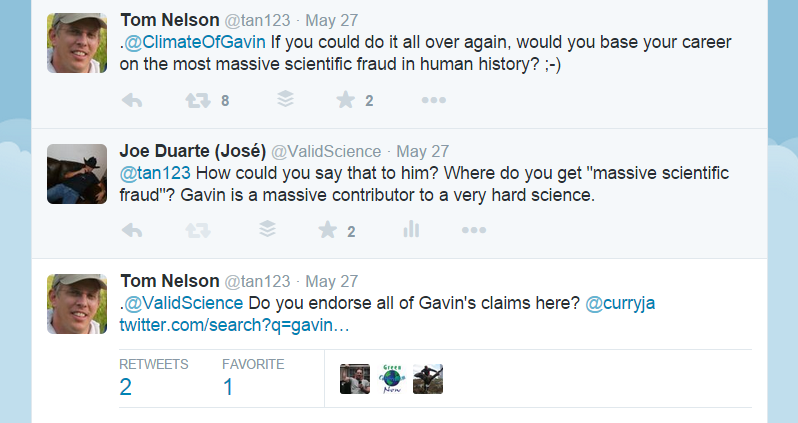

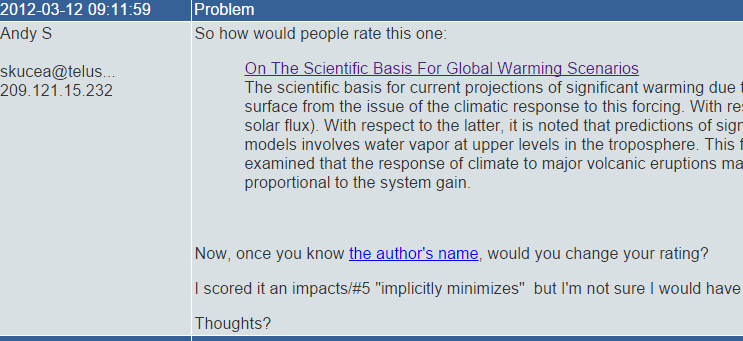

Title: Extremely Intense Hurricanes: Revisiting Webster et al. (2005) After 10 Years

Abstract: Webster et al. (2005) documented a large and significant increase in both the number as well as the percentage of Category 4-5 hurricanes for all global basins from 1970-2004, and this manuscript examines if those trends have continued when including ten additional years of data. In contrast to that study, as shown here, the global frequency of Category 4-5 hurricanes has shown a small, insignificant downward trend while the percentage of Category 4-5 hurricanes has shown a small, insignificant upward trend between 1990 and 2014. Accumulated Cyclone Energy globally has experienced a large and significant downward trend during the same period. We conclude that the primary reason for the increase in Category 4-5 hurricanes noted in observational datasets from 1970 to 2004 by Webster et al. was due to observational improvements at the various global tropical cyclone warning centers, primarily in the first two decades of that study.

So, not much of a trend, though I think 2015 stats might change the results. What's most interesting to me is that you won't read about this study in the media. Well, you probably won't. I think we can say with near certainty that Scientific American will not report this. Justin Gillis at the New York Times will not report it. Chris Mooney will not report it. Ars Technica will not report it. Probably none of the major news outlets will report it other than perhaps Fox News. But they will report anything to do with more hurricanes, and anyone linking them to AGW. Well, we're ruining the test by calling them out. One of these outfits might actually report it as a result of being called out, but I doubt it.

Something I've been thinking about lately is how mediated and constructed our realities are. We've done a lot of work on this in terms of how a person frames events, for example in Cognitive Behavioral Therapy.
We know how powerful a person's perspective and framing can be. That alone has implications for how we deal with extremely subtle statistical realities like climate change. (Well, one can see them as subtle – or not. That's the point.) But in this case I'm talking about exogenous factors – the information that is made available to people. There are a number of quality studies by noted climate scientists and oceanographers that would appear to dampen worries about climate change, or at least catastrophic climate change. However, these papers appear to be virtually ignored by the media. That is interesting. There's a swath of reality that is being blacked out by major media outlets, and another swath that is being amplified and emotionalized. I think this is clear, and ideally wouldn't be controversial across the political spectrum.

What concerns me is that we may not be dealing with a purely political spectrum at this point. There are many reasons for a social scientist to wonder if environmentalism operates as a religion at both psychological and sociocultural levels of analysis. This question deserves a lot of research. So far, little of it has been conducted, likely due to the current popularity of environmentalism in academic culture, including social science. I think it's clear with specific individuals that environmentalism is their religion (see the Pachauri quote below) – the question is how common this is. Environmentalists are unlikely to be homogeneous.

Perceived reality is such a malleable thing. Lots of people know this, and lots of people use this fact. The whole profession of PR and publicists feeds on it, and it deeply disturbs me to see publicists employed in scientific bodies like AAAS. PR and science are not compatible. Right now we seem to have a shortage of science writers in general, and an acute shortage of science writers who cover climate and are not also staunch environmentalists. That's an ethical oversight by their employers.
If anything is "unsustainable", getting our science through such a biased filter must be. I don't have solutions yet, but this issue should be thoroughly researched. If environmentalism is in fact a religion, this becomes an even bigger problem. We must avoid getting our science from and through a religion, at all costs.

Afterword

For those of you who are environmentalists, well first, welcome. I assume the idea that environmentalism could be a religion might strike you as absurd. Well, some of you may be fine with viewing it as a religion, but I expect most would not be fine with it, for a number of reasons. I haven't given you any details on what I'm thinking about, what my hypotheses might be, how we could navigate this issue and determine, scientifically, if environmentalism is a religion (or similar to one, or something else). I don't blame you for being offended. It will be some time before we have sizable data on this question, and we need more researchers involved.

IPCC head Rajendra Pachauri was quite explicit about his religious motivations in his resignation letter:

"For me the protection of Planet Earth, the survival of all species and sustainability of our ecosystems is more than a mission. It is my religion and my dharma."

I also see a lot of viciousness and cultism in the movement that I don't see as much on other political issues. Greenpeace actually published the following on their website:

"The proper channels have failed. It's time for mass civil disobedience to cut off the financial oxygen from denial and scepticism. If you're one of those who believe that this is not just necessary but also possible, speak to us. Let's talk about what that mass civil disobedience is going to look like.

"If you're one of those who have spent their lives undermining progressive climate legislation, bankrolling junk science, fuelling spurious debates around false solutions and cattle-prodding democratically-elected governments into submission, then hear this: We know who you are. We know where you live. We know where you work. And we be many, but you be few."

This is clearly a threat. Greenpeace's explanation for the post suggests that they don't disown violence and threats:

"We realise it might have sounded threatening to some. This is why we have explained over and over that it is NOT a threat of violence, that Greenpeace doesn't endorse violence, it is not a campaign tactic and never will be."
Juliette

It might have sounded threatening to some? They've explained over and over that it's not a threat? Talk about treating reality as malleable. They don't understand that they don't get to change the reality that it was a threat simply by decree. The man said "We know where you live." I don't think I knew before now that Greenpeace is a wink-wink pro-violence organization. It's deeply worrisome that some of the "science" writers that filter climate science for us happily associate with Greenpeace. (I'd be interested to know if there have been any acts of violence reported against individuals who publicly opposed environmentalism. There was some violence or at least vandalism in California against people who had donated to the anti-gay-marriage proposition. The donation records were public, with addresses apparently. I suppose the gay marriage issue might be another case of very strong emotions and not much formal argumentation or play of ideas.) I don't think we see this kind of behavior so much on the income tax debate, ObamaCare, drugs, etc. People aren't motivated to threaten others so much for being pro-tax-cut. There seem to be sacred values at work – perhaps religious ones – when people do what Greenpeace did.
There are specific concepts of nature and sustainability that environmentalists subscribe to, and perhaps ecological stasis could be said to be a sacred value, since cost-benefit analyses on climate mitigation/adaptation are so controversial to them. There is an intolerance for disagreement that we see when people form tribes that insulate themselves from the views and motivations of out-groups. For example, I think it would be very difficult for staunch environmentalists to understand why someone might admire the Koch brothers for their achievements and agree with their funding choices – their language around the Koch brothers suggests that they think it's self-evident that disagreeing with environmentalism is evil. I don't think they contemplate that people might value and admire productiveness, entrepreneurship, enterprise and so forth in much the same way that environmentalists might value a small carbon footprint.

One thing that's clear is that environmentalism offers signaling opportunities like nothing we've ever seen. Christians and Muslims have never been able to chronically signal their virtue or status as believers by buying a certain kind of car. There's never been a Christian Prius. Or Christian detergent and paper towels and shopping bags. Chronic signaling of one's virtue does not define religion, but that should give you something to think about. There are some distinctive features here.

(Updated main body of post on October 27, 2015: Cut the tangents about trigger warnings, which didn't fit, and cut grumpy parts about academia, which were needless distractions (and too grumpy).)

The Lewandowsky, Gignac, and Oberauer paper in PLOS ONE has been substantially corrected. I had alerted the journal last fall that there were serious errors in the paper, including the presence of a 32,757-year-old in the data, along with a 5-year-old and six other self-reported minors.
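For readers wondering why one impossible age matters so much: a Pearson correlation is built from deviations around the mean, so a single value thousands of times larger than the rest can dominate the whole calculation. The Python sketch below illustrates this under loudly stated assumptions. The ages and "belief scores" are invented toy numbers, not the study's data, and the helper names `pearson_r` and `screen_ages`, along with the 18-to-100 plausibility window, are hypothetical choices for illustration. Whether such an outlier hides, weakens, or even flips a correlation depends on the score attached to it; here that score sits near the sample mean, which flattens the correlation toward zero.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient (hypothetical helper)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def screen_ages(ages, scores, min_age=18, max_age=100):
    """Keep only rows whose age falls in an assumed plausible adult range."""
    kept = [(a, s) for a, s in zip(ages, scores) if min_age <= a <= max_age]
    return [a for a, _ in kept], [s for _, s in kept]


# Invented toy data (NOT from the study): scores rise fairly steadily with age.
ages = [18, 22, 25, 30, 35, 40, 45, 50, 55, 60, 65, 70]
scores = [1.5, 1.8, 2.0, 2.1, 2.6, 2.4, 2.9, 3.1, 3.0, 3.4, 3.6, 3.5]

# Add one impossible age whose score sits near the sample mean.
ages_bad = ages + [32757]
scores_bad = scores + [2.65]

r_clean = pearson_r(ages, scores)
r_bad = pearson_r(ages_bad, scores_bad)
r_screened = pearson_r(*screen_ages(ages_bad, scores_bad))

print(f"clean data:        r = {r_clean:.3f}")
print(f"with one outlier:  r = {r_bad:.3f}")
print(f"after age screen:  r = {r_screened:.3f}")
```

In this toy example, the clean data show a strong positive correlation, the single 32,757-year-old row drags it to roughly zero, and a simple range screen applied before analysis restores it. The same screen also drops anyone under the assumed minimum age, which is a separate, ethical reason to check ages before running any analysis.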
The paleoparticipant in particular had knocked out the true correlation between age and the conspiracy belief items (the authors had reported that there was no correlation between age and those items). See my original essay on this paper, what the bad data did to the age correlations, and lots of other issues here.

Deeply troubling issues remain. The authors have been inexplicably unwilling to remove the minors from their data, and have in fact retained two 14-year-olds, two 15-year-olds, a 16-year-old, and a 17-year-old. This is strange given that the sample started with 1,001 participants. It is also wildly unethical.

To provide some context, here's the timeline:

October 4, 2013: A layperson alerted Lewandowsky – on his own website – that there was a 32,757-year-old and a 5-year-old in his data. There was no correction. Recall that he had reported analyses that included the age variable in the paper, and that these analyses were incorrect because of the 32,757-year-old.

August 18, 2014: On the PLOS ONE page for the paper, I alerted the authors to the 32,757-year-old, the 5-year-old, and the six other minors in their data (along with several other problems with the study). There was no correction.

September 22, 2014: I contacted PLOS ONE directly and reported the issue. I had waited over a month for the authors to correct their paper after the notification on August 18, but they had mysteriously done nothing, so it was time to contact the journal.

August 13, 2015: Finally, a correction was published. It is comprehensive, as there were many errors in their analyses beyond the age variable.

I'd like to pause here to say that PLOS ONE is beautiful and ethically distinctive. They insisted that the authors publish a proper correction, and that it thoroughly address the issues and errors in the original. They also placed a link to the correction on top of the original paper. The authors did not want to issue a proper correction.
Rather, Lewandowsky preferred to simply post a comment on the PLOS ONE page for the paper and call it a corrigendum. This would not have been salient to people reading the paper on the PLOS ONE page, as it requires that one click on the Comments link and go into the threads. Notably, Lewandowsky's "corrigendum" was erroneous and required a corrigendum of its own... It was also strangely vague and uninformative.

A serious ethical issue remains – they kept the minors in their data (except the 5-year-old). They had no prior IRB approval to use minors, nor did they have prior IRB approval to waive parental consent. In fact, the "ethics" office at the University of Western Australia appears to be trying to retroactively approve the use of minors as well as ignoring the issue of parental consent. This is ethically impossible, and wildly out of step with human research ethics worldwide. It also cleanly contradicts the provisions of the Australian National Statement on Ethical Conduct in Human Research (PDF). In particular, it contradicts paragraphs 4.2.7 through 4.2.10, and 4.2.12. The conduct of the UWA ethics office is consistent with all their prior efforts to cover up Lewandowsky's misconduct, particularly with respect to Lewandowsky's Psych Science paper, which should be treated as a fraud case. UWA has refused everyone's data requests for that paper, and has refused to investigate.

Corruption is a serious problem in human institutions, one that I increasingly think deserves a social science Manhattan Project to better understand and ameliorate. UWA is a classic case of corruption, one that mirrors those reported by Martin.

Here is the critical paragraph regarding minors in the PLOS ONE correction: "Several minors (age 14–17) were included in the data set for this study because this population contributes to public opinions on politics and scientific issues (e.g. in the classroom).
This project was conducted under the guidelines of the Australian National Health and Medical Research Council (NH&MRC). According to NH&MRC there is no explicit minimum age at which people can give informed consent (as per https://www.nhmrc.gov.au/book/chapter-2-2-general-requirements-consent). What is required instead is to ascertain the young person’s competence to give informed consent. In our study, competence to give consent is evident from the fact that for a young person to be included in our study, they had to be a vetted member of a nationally representative survey panel run by uSamp.com (partner of Qualtrics.com, who collected the data). According to information received from the panel provider, they are legally empowered to empanel people as young as 13. However, young people under 15 are recruited to the panel with parental involvement. Parental consent was otherwise not required. Moreover, for survey respondents to have been included in the primary data set, they were required to answer an attention filter question correctly, further attesting to their competence to give informed consent. The UWA Human Rights Ethics Committee reviewed this issue and affirmed that ‘The project was undertaken in a manner that is consistent with the Australian National Statement of Ethical Conduct in Human Research (2007).’"

The above may be difficult for people to parse and unpack. Here are the essentials we can extract from it:

1. There was no prior IRB approval for the use of minors. (UWA's review was retroactive, amazingly.)
2. Parental consent was not obtained for minors who were at least 15 years of age.
3. Obtaining parental consent for 13- and 14-year-olds was delegated to a market research company. However, the term "consent" is not used in this case. Rather, the authors claim that the market research company recruited these kids with "parental involvement". It's not clear what this term means.
4. The UWA "ethics" committee is attempting to grant retroactive approval for the use of minors and the lack of parental consent, as well as the delegation of consent obtainment to a market research company. They cite the National Statement of (sic) Ethical Conduct in Human Research, even though it contains no provision for retroactive approvals or cover-ups. In fact, the Statement does not contemplate such absurdities at all.
5. In the opening sentence, they claim they intended to use minors. I don't believe that for a minute. First, if they intended to use minors, they would have sought IRB approval to do so (before conducting their study). Second, if they intended to use minors, they would not have only seven minors. Third, there is no mention of minors in the original paper, nor any mention of this notion of high school classrooms as the public square.

Facts 1 through 4 are revolutionary. This is an ethical collapse. Researchers worldwide would be stunned to hear of this. No IRB approval for the use of minors? No parental consent? A new age threshold of 15 for parental consent, and 13 for participation? Delegating parental consent to a market research company? An IRB acting as a retroactive instrument? An IRB covering up the unapproved use of minors? I'm not sure we've ever encountered any one of these things. Having all of these happen at the same time is a singularity, an ethical event horizon that dims the sun.

Notably, their citation of the NH&MRC page is a sham. The page makes no mention of age or minimum ages. It ultimately defers to Chapter 4.2, which takes for granted that there is IRB approval to use minors, as well as parental consent. (See the Respect and Standing Parental Consent sections.) It does not contemplate a universe where IRB approval is not obtained. It's extremely disturbing that staff at UWA would try to deceive the scientific community with a sham citation. I contacted UWA about these issues some months ago.
As far as I can tell, they refuse to investigate. It's as though their ethics office is specifically designed to not investigate complaints if they think they can escape scrutiny and legal consequences. Mark Dixon of the UWA anti-ethics office said the following in an e-mail: "However, this project was designed for a general demographic. Surveys targeted to a general population do not prohibit the collection of data from minors should they happen to respond to the survey." "You are probably aware that the survey written up in the article was an online survey, where consent is indicated by the act of taking the survey." "Inclusion or omission of outliers, such as the '5 year old' and the '32,000 year old', are reasonable scholarship when accompanied by explanatory notes. However, it would be unusual to actually delete data points from a data-set, so I don't understand your concern about the remaining presence of such data-points in the data-set." "You expressed concern that the survey “… did not even ask participants for their ages until the end of the study, after participation and any "consent" had been secured". Demographic information is routinely collected at the end of a survey. This is not an unusual practice." To say that these statements are alarming is an understatement. He thinks research ethics doesn't apply to online studies. He thinks we don't need to obtain consent for online studies, that simply participating is consent. He thinks 5-year-olds and 32,757-year-olds are "outliers" and that it is reasonable to retain them (is he aware that the age variable was analyzed?) He thinks researchers can ask someone's age at the end of a study. This person retains the title "Associate Director (Research Integrity)", yet he appears to know nothing of research or research integrity. The best explanations here are that he has no training in human research ethics and/or he's corrupt. This is such an extraordinary case. For lay readers, let me note the following: 1. 
An online study is a study like any other study. The same research ethics apply. There's nothing special about an online study. Whether someone is sitting in front of a computer in a campus lab, or in their bedroom, the same ethical provisions apply. 2. We always require people to be at least 18 years of age, unless we are specifically studying minors (which would require explicit IRB approval). 3. We always include a consent form or information letter at the start of an online study. This form explains the nature of the study, what participants can expect, how long it should take, what risks participation may pose to the participant, any compensation they will receive, and so forth. Notably, the form will explicitly note that one must be at least 18 to participate. 4. We always ask age up front, typically the first page after a person chooses to participate (after having read the consent or information sheet.) 5. We always validate the age field, such that the entered age must be at least 18 (and typically we'll cap the acceptable age at 99 or so to prevent fake ages like 533 or 32,757.) All modern survey platforms offer this validation feature. A person cannot say that they are 5 years old, or 15 years old, and proceed to participate in an IRB-approved psychology study (where approval to use minors was not granted.) We can't usually do anything about people who lie about their ages (relatedly, I doubt the 5-year-old age was accurate, but it won't matter in the end.) This has nothing to do with online studies. The manner in which people report their age is the same in online and in-person studies – they're seated in front of a computer and self-report their age. But if someone tells us that they are minors, their participation ends at that moment. Because of this, there should never be minors or immortals in our data. 
If we wanted to card people, we could – both in person and online (see Coursera's ID validation) – but there's a trade-off between age verification and anonymity. 6. Note that Dixon's first claim contradicts the Correction's claim that they intended to use minors. Note also that Dixon's claim reduces to: If approval to use minors is not obtained, that's when you're allowed to use minors. He's saying that in a study not intended for minors, you're allowed to have them in your data. That would open an interesting and catastrophic loophole – we could always use minors simply by not asking for approval to use them... At this point, I think PLOS ONE should just retract the paper. The paper is invalid anyway, but we can't have unapproved – or retroactively approved – minors in our research. UWA is clearly engaged in a cover-up, and their guidance should not inform PLOS ONE's, or any journal's, decisions. This exposes a major structural ethical vulnerability we have in science – we rely on institutions with profound conflicts of interest to investigate themselves, to investigate their own researchers. We have broad evidence that they often attempt to cover up malpractice, though the percentages are unclear. Journals need to fashion their own processes, and rely much less on university "finders of fact". We should also think about provisioning independent investigators. The standards in academic science are much lower than in the private sector (I used to help companies comply with Sarbanes-Oxley.) In any case, UWA's conduct deserves to be escalated and widely exposed, and it will be. This is far from over – we can't ignore the severity of the ethical breaches here, and we won't. I doubt minors were harmed in this case, but institutions that would do what these people did are at risk of putting minors – and adults – in harm's way.
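For readers who haven't built an online study, the age screening in points 4 and 5 above amounts to a few lines of logic. Below is a minimal Python sketch, not the code of any particular survey platform (platforms expose this as a field-validation setting rather than code you write); the function name `screen_age` and the 18 and 99 bounds are assumptions for illustration, taken from the description above. It can't catch people who lie about their age. It only guarantees that a self-reported minor or immortal never gets past the first page.

```python
from typing import Optional

# Assumed bounds, following the description above: participants must be at
# least 18, and ages above a plausible cap (here 99) are rejected as fake.
MIN_AGE = 18
MAX_AGE = 99


def screen_age(raw_answer: str, min_age: int = MIN_AGE, max_age: int = MAX_AGE) -> Optional[int]:
    """Validate the self-reported age asked on the first page after consent.

    Returns the age if the participant may continue, or None if participation
    should end here (non-numeric entry, minor, or implausible age).
    """
    try:
        age = int(raw_answer.strip())
    except ValueError:
        return None  # not a whole number, e.g. "abc" or "17.5"
    if age < min_age or age > max_age:
        return None  # e.g. 5, 15, 533, or 32757
    return age


for answer in ["5", "15", "18", "42", "533", "32757", "abc"]:
    outcome = "continue" if screen_age(answer) is not None else "end participation"
    print(f"{answer}: {outcome}")
```

The point of putting this check first, rather than collecting age with the other demographics at the end, is that an ineligible person is stopped before they answer any study items.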
Throwing out the rules regarding minors in research is a trivial recipe for a long list of bad outcomes, as is clinging to the nonsense that research ethics don't apply to online studies. These people are a disgrace and a menace.

APPENDIX A: UWA Personnel Who Were Alerted to the Minors

Murray Maybery, Head of the Department of Psychology: I contacted him on August 20, 2014, informing him of the minors in the data, as well as other issues. He did not respond.

Vice-Chancellor Paul Johnson: I contacted him (and Maybery again) on January 19, 2015, informing them of the minors, and requesting the full data. Neither of these men responded. I tried again on February 15, 2015, and Maybery pointed me to the stripped data file for the related Psych Science study, essentially mocking my explicit request for the full data (I had made clear that the available data was stripped, yet he still referred me to it without comment. There's some real ugliness in Perth.) Everyone knows about the stripped data (Lewandowsky stripped the age variable from the Psych Science study data file, along with other variables like gender and his Iraq War conspiracy item, an item whose inclusion weakens his theories – the paper says his minimum cutoff age was 10. He won't release the full data.) Maybery ignored my reports on the presence of minors and had nothing to say about it. Johnson never responded at any point, and was revealed to be cartoonishly corrupt in a similar episode. More recently, he was in the media for revoking Bjorn Lomborg's job offer because Lomborg likes to do cost-benefit analyses on climate change mitigation, and this is evidently controversial. This whole situation is extraordinary – only once before in my life have I encountered corruption that stilled and chilled me the way UWA has. I used to be a Court Appointed Special Advocate (CASA) for abused, abandoned, or neglected children in CPS care, and I'll write about an episode from that experience soon.
I'll update the list as events dictate, and document everything more thoroughly in other venues. I'll also publish similar reports, here and elsewhere, on how Psych Science and APS responded when informed of the minors and other issues. I haven't posted much on these issues lately because my own work comes first, but I would never let these issues go – I prefer to be slow and methodical on these issues anyway, to give people a long time to do their jobs, to take my time to understand what I'm looking at, etc.

APPENDIX B: QUICK VALIDITY NOTE

The study was invalid because of the low quality and bias of the items, as well as the fact that some of the authors' composite variables don't actually exist. In social psychology, our constructs don't always exist in the sense that trees, stars, and the Dallas Cowboys exist. They're often abstractions that we infer based on sets of survey items or other lower-level data. I could put together a scale and call it Free Market Views, but that doesn't necessarily mean I'm measuring free market views. It has to be validated. People should read my items and make sure they actually refer to free market views. They should make sure my items are not biased. They should make sure that my items are clear, single-barrelled, and understandable to virtually all participants. They should run factor analyses to see if my items are measuring the same thing, or perhaps measuring more than one thing. Well, I should do all those things, but so should other people. Journalists should be mindful of this reality: Just because someone says they've measured free market views, and says that "free market views" predict this and that, doesn't make it so. Remember, this is not like trees, stars, and the Dallas Cowboys. You need due diligence. You need to look at someone's items and data and think about them, reason about them. So what happened here? Well, there were five purported free market items.
A simple factor analysis reveals that there are two strong factors in those five items. The strongest factor would credibly be called Environmentalism. The following items load on this factor: "Free and unregulated markets pose important threats to sustainable development." "The free market system is likely to promote unsustainable consumption." "The free market system may be efficient for resource allocation but it is limited in its capacity to promote social justice." The second factor carries these items: "An economic system based on free markets unrestrained by government interference automatically works best to meet human needs." "The preservation of the free market system is more important than localized environmental concerns." Both factors include environmentalist items, but the first factor includes two of them (the sustainability items), those two items load more heavily on that factor than the social justice item does, and that factor explains 43 percent of the variance in scores, while the second factor explains 25 percent. The second factor is distinct in carrying what I'd call the extremist items: unrestrained free markets "automatically work best" to meet (unspecified) "human needs"; and preservation of free markets is more important than (unspecified) localized environmental concerns. The whole scale is a mess because the items are a mess. For example, this item is not only double-barrelled (which we avoid), but the barrels point in opposite directions (which I've never seen before): "The free market system may be efficient for resource allocation but it is limited in its capacity to promote social justice." Which barrel wins? It's too complicated on several grounds. The term "social justice" is a leftist concept. The rest of us don't use it, don't grant its conception of justice, its definition of the good. In other words, not everyone thinks social justice is just. Perhaps more importantly, people who don't use the term might not know what it means.
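The 43 and 25 percent figures can be translated back into eigenvalues, which is the quantity the "eigenvalues greater than one" (Kaiser) retention rule looks at. A minimal sketch, assuming the percentages are the initial, unrotated eigenvalues from a PCA on the correlation matrix of the five items, where total variance equals the number of items. After an oblique rotation such as Direct Oblimin the components are correlated, so their variances overlap and no longer add up this way. The helper names are hypothetical.

```python
from typing import List


def eigenvalue_from_percent(percent_of_variance: float, n_items: int) -> float:
    """Convert a component's 'percent of variance explained' to its eigenvalue.

    Assumes a PCA on the correlation matrix of n_items standardized items,
    where each item contributes a variance of 1, so total variance == n_items.
    """
    return percent_of_variance * n_items / 100


def kaiser_retain(eigenvalues: List[float]) -> List[float]:
    """Kaiser criterion: keep components whose eigenvalue is greater than 1."""
    return [ev for ev in eigenvalues if ev > 1]


n_items = 5                   # the five purported free market items
reported_percents = [43, 25]  # variance explained by the two factors, per the text
eigs = [eigenvalue_from_percent(p, n_items) for p in reported_percents]

print([round(e, 2) for e in eigs])   # [2.15, 1.25]
print(len(kaiser_retain(eigs)))      # 2: both clear the greater-than-one cutoff
print(100 - sum(reported_percents))  # 32: percent left for the remaining three components
```

The greater-than-one rule is only one retention heuristic; scree plots and parallel analysis are common alternatives.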
Lastly, agreement with the double-barrelled item was scored as disagreement with free markets, yet I suspect many pro-market types would agree with it (if they know what social justice entails.) The scale in general is politically biased. It's written in the language of the left, particularly in the language of environmentalists, with concepts like "sustainability" – another abstraction that non-environmentalists will not necessarily grant as a valid or useful concept, or even understand. It's not nearly as bad as the invalid SDO and RWA scales, but it's decently bad. We could never assume we're getting a clean read on free market attitudes with this scale. It's got too much environmentalism, left-wing language, and poor item construction. It should never be used. We don't really know what we're looking at when we see a correlation between this scale and some other scale or view Y – our best guess is that we're looking at the relation between environmentalism and Y. Here's the pattern matrix from a PCA with Direct Oblimin rotation, eigenvalues greater than one. I included all the political items, not just the purported "free market" scale, because we can't assume a priori how we should slice them. It looks like we'd drop the communism item (it's probably on an island because no one talks about communism anymore) and perhaps the socialism item for similar reasons. This is stuff that researchers would normally do before reporting, or even analyzing, substantive results. Evidently, Lewandowsky, Gignac, and Oberauer didn't do the work – they just asserted composite variables that include the items they want to include, but that's not normally how social psychology works.

Substantive note: The correction does not address one of the substantive errors in the original. Gender is the largest predictor of GMO attitudes. They never reported this, but rather implied that gender did no work.
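Whether women actually distrusted GMOs is a separate question from the sign of a correlation, and a toy example makes that concrete. The numbers below are invented for illustration (they are not the study's data): a hypothetical 1-7 GMO trust scale where higher means more trust and 4 is neutral. Women score lower than men, so the correlation between being female and trust is negative, yet every woman in the toy data sits on the trusting side of the midpoint. The `pearson_r` helper is a plain Pearson correlation, which with a 0/1 variable is the point-biserial correlation.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation; with a 0/1 variable for x this is the point-biserial r."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Invented scores on a hypothetical 1-7 GMO trust scale (7 = most trusting).
MIDPOINT = 4
men = [6, 6, 7, 7]
women = [5, 5, 6, 6]

is_female = [0] * len(men) + [1] * len(women)
scores = men + women

print(round(pearson_r(is_female, scores), 2))  # -0.71: women trust GMOs less than men...
print(mean(men), mean(women))                  # 6.5 5.5
print(all(s > MIDPOINT for s in women))        # True: ...yet every woman is on the pro-GMO side
```

Reporting group means or full distributions alongside the correlation is what would settle where women actually placed on the scale.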
A lot of times boring variables like age and gender explain a lot of variance, and in this case gender explained more than any other. (Women trusted GMOs less, using Lewandowsky's primitive linear correlations on the scale index. It's unclear whether women actually distrusted GMOs – i.e., where the women clustered on the items. A correlation doesn't tell you this. A bad researcher would say "women distrusted GMOs" given a negative correlation coefficient, without specifying descriptives or their actual, substantive placement on the scale, which could in fact be pro-GMO, just less pro than men.)

The Karl et al. study highlights something I've been thinking about lately. I don't know if the Karl paper is important, good, or bad. It claims to debunk the slowdown in surface warming. Other papers will claim the opposite. This won't be the end of it, but imagine that it was – imagine that we saw a decisive breakthrough in climate science, or a series of them, that debunked the slowdown, and another body of work that settled on 3.1 °C for ECS.

If you're a climate skeptic, or better yet, a person who is currently skeptical of burdensome future human-caused warming, you should be ready not to be. You're presumably skeptical because of issues you see with the evidence, levels of certainty or uncertainty, perhaps features of climate science and its methods or predictive track record. All of those issues can in theory be resolved by new evidence, or new types of evidence and methods. If you're a skeptic or a lukewarmer, I wouldn't assume that the evidence is going to roll your way. (ECS seems to have had a bit of a downward run over the last few years, but who knows.) You should be ready for anything, evidence-wise. Don't get too comfortable. Life is full of surprises, and so is nature. Earth's climate answers to no one. It will do whatever it does. It is completely uncoupled from our desires, agendas, elections, ideologies, beliefs, arguments, pride, etc. I don't think people should hitch their ideological wagons to the behavior of a planetary climate system. That's odd. This applies to everyone, of course.

Scientific notes: 1. Measuring surface temperatures sure is complicated. In fact, as Gavin Schmidt said, global mean temperature isn't measured per se. It's estimated. Scientists can come along in 2015 and redo the temperature estimates for the past several decades. That's strange. Most sciences don't work that way, don't have this constant process of re-estimation of past measured variables. If scientists can redo temperature estimates in 2015, they can presumably redo them in 2016, and 2017, and perhaps in 2023. I think we need to understand this better. Maybe they're closing in on maximum feasible bias reduction and we won't see much adjustment in the future, but this should be explained. 2. Knowing about or believing in human-caused climate change is nothing like knowing about gravity or that the earth is not flat.
This is not like looking at something and seeing that it's there, or figuring out the horizon, or dropping a ball. It's so much more complicated, driven by inferential estimates and wicked statistics. Climate activists should be much less mean to skeptics, and stop trying to treat this issue as though people are obligated to march to the claims of a young, complex, and revisionist science. I don't think people are obligated to believe in things they cannot observe or confirm directly except in special circumstances. Believing in everything the media folds under "science" is probably unwise, and it's unclear how a rational knower is supposed to navigate our media/science culture. I don't have any kind of prescription.

Caring

The eternal caveat applies: The science is just the science. It doesn't have to matter to you, not politically, not philosophically or personally. People get to choose their political philosophies and ethical systems, and you don't need to catastrophize any arbitrary level of future adversity if you don't want to. You don't have to care about the science of obesity, or the science of testicular cancer, or the science of sadness, or an increase in storm count. There are lots of things a person could choose to care about or not care about, and it's unclear why anyone has to care about any particular science or diffuse future risks. There's a mindset in modern politics that wants to "Do Something!" about everything. I think we'll find that some of it is driven by affluence – that people worry about more things, smaller things, the more affluent a society becomes. In any case, a person's quality of life is powerfully shaped by their perspective and framing – we know how profound that can be, the glass half full vs. half empty mindset. It's strange that we never seem to apply that wisdom to environmental issues.
You could put me on the gulf coast and jack up the hurricane count by a third, and I wouldn't care if I had someone to love and books to read. There are so many other things going on in a human life than weather and sea levels, so much more beyond material and economic concerns. Some people (not me) would move to Mars if they had a chance, even though the climate would be so hostile that they'd be confined to quarters. That's not just about affluent American space geeks – most people in the world don't care about climate change, even when forced to choose six "priorities" in a biased UN survey. The UN wouldn't let me participate in the survey because I couldn't find six things on their list that were priorities to me. The list is framed from a top-down, government-centric bias that enjoins people to express vague wishes for "better" roads, health care, food, and so forth. They don't offer priorities like "end the drug war", "deregulate immigration", "cut taxes", "eliminate income taxes", "free market healthcare", or "get the UN out of my life." It was designed for the UN to be able to say that adults around the world want governments to deliver things like "affordable and nutritious food" and "action on climate change". The items and forced choices will systematically discriminate against non-leftist participants, as well as people who don't think there are lots of problems they need authority figures to solve – such people won't even be allowed to submit their answers. The stated goal of the survey is "that global leaders can be informed as they begin the process of defining the new development agenda for the world", what economist William Easterly would call the "Tyranny of Experts". And still, even with the rigged design, people don't choose climate change. Relatedly, Bjorn Lomborg was correct to say that Pacific Islanders don't care about it. (Choose Oceania in the dropdown.) Pretty much no one does. 
It doesn't make the cut on any continent or region that they list. As for affluence, start with the Low HDI countries option and work your way up – the poorest countries care the least. As per my hypothesis above, more people care as you work up HDI, yet it never makes the cut even in the richest. I didn't know that until today. I thought environmentalism was more popular than this, but I now realize that I probably just know a lot of environmentalists. Correction:

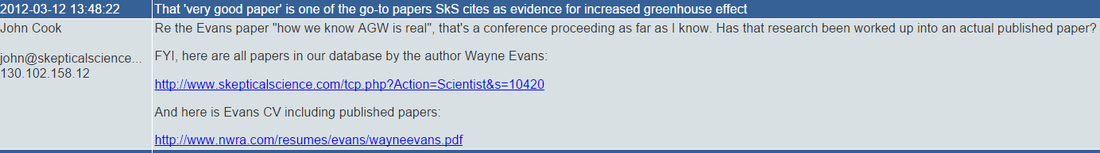

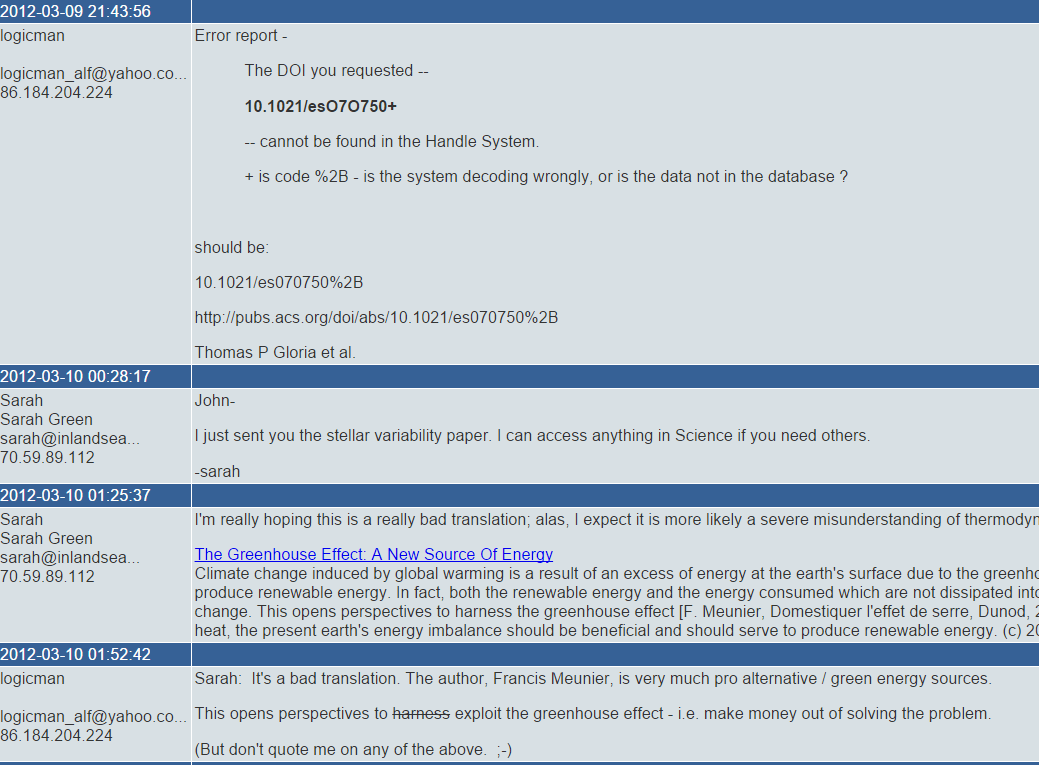

In one of my posts on the Cook fraud, I listed 19 social science, survey, marketing, and opinion papers that were coded as "climate papers" endorsing anthropogenic warming. I made a mistake listing this one: Vandenplas, P. E. (1998). Reflections on the past and future of fusion and plasma physics research. Plasma Physics and Controlled Fusion, 40(8A), A77. It was coded as mitigation but not as endorsement. My apologies. Counting: Since we have a 1:1 replacement policy at JoseDuarte.com, I'll give you another paper that was indeed counted as endorsement: Larsson, M. J. K., Lundgren, M. J., Asbjörnsson, M. E., & Andersson, M. H. (2009). Extensive introduction of ultra high strength steels sets new standards for welding in the body shop. Welding in the World, 53(5-6), 4-14. In fact, this one was counted as a "mitigation" paper and as "explicit endorsement." As I said in that post, there are lots more where that came from, lots more that I haven't listed. I don't provide them all for two reasons: 1) I want to get people thinking about validity and discourage something I saw after I posted that list of 19 papers – counting and recomputation of meaningless data. 2) I'm saving some for the journal article. The Cook study was invalid because of the search procedure, the resulting arbitrary dataset that gave some scientists far more votes than others, the subjective raters having a conflict of interest (which is unheard of), the basic design, the breaking of rater blindness and independence, etc. That means there is nothing to count, no percent, not a 97%, not any percent. You could recompute the percentage as 70% or 90% or 30% if you went through the data, but it would still be meaningless. There is no method known to science by which we could take their set of papers and compute a climate science consensus. I need to do a better job of explaining what it means to say that a study is invalid.
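Rater independence isn't a formality. Agreement statistics such as Cohen's kappa correct the raw agreement between two raters for the agreement expected by chance, and that correction only means something if each rater coded the material on their own. A minimal Python sketch of two-rater kappa follows; the category labels and ratings are invented for illustration and are not the Cook et al. data or their reliability procedure. If raters consult each other before settling on codes, observed agreement goes up for reasons that have nothing to do with the reliability of the coding scheme, and kappa overstates it.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who coded the same items independently."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance if each rater assigned codes at their own
    # marginal rates, independently of the other rater.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten abstracts (invented for illustration).
rater_1 = ["endorse", "endorse", "neutral", "neutral", "neutral",
           "reject", "endorse", "neutral", "neutral", "reject"]
rater_2 = ["endorse", "neutral", "neutral", "neutral", "neutral",
           "reject", "endorse", "neutral", "endorse", "reject"]

print(round(cohens_kappa(rater_1, rater_2), 2))  # 0.68: 80% raw agreement, 38% expected by chance
```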
People have this instinct to still play with data, any data, because it's there, like Mt. Everest. It's an unfortunate artifact of human nature and first-mover advantage, especially in cases where lax journals don't act swiftly to retract. As always, I want to stress that the study was a fraud, and that this is a completely separate issue from validity. I always remind people of this because I think it would be irresponsible to pretend that it wasn't. They lied about their method. They claimed their ratings were blind to author and independent – they routinely broke blindness and independence in a forum with other raters. Lying about your methods is fraud. That alone makes the study go away. There's no counting to be done. The above welding paper is an example of the third act of fraud – saying that these were climate papers. There are all kinds of these absurd papers in their 97%. This was never real. There was never a 97%. I heard someone say something like: "If you don't like a study, run your own to debunk it." That's a common outlook in science, and it's good advice in most cases. In this case, it's inappropriate, bad scientific epistemology. Invalid studies, certainly fraudulent ones, never impose a burden on others to go out and collect data to debunk them. Invalid research should simply be retracted and everyone should carry on with their lives as if the research never happened, because in a sense, it didn't. Fraud of course should be retracted with prejudice. In such cases, there's nothing to refute or debunk with new data – you'd be swinging at air. If we want to know the consensus, we already know it from quality surveys of climate scientists (it's 78–84%), so running around doing another study to "refute" the Cook study would be silly (especially a study based on subjective ratings of abstracts.) Sure, some people believe the 97% figure right now – Cook and company were good at the media angle.
That will be corrected in time, this study will be dealt with, and I expect the people involved will face appropriate consequences.

A few days ago on Twitter I said that climate skeptics needed to distance themselves from the conspiracy theorists and harassers. This upset a dozen or so climate skeptics and led to a flurry of rebukes that I had trouble following due to their sheer volume and the constraints of a 140-character medium. I can't coherently respond or clarify my thoughts on Twitter itself, so I'll do so here. Since joining Twitter, I've posted variously on science, social science, climate science, statistical methods, fraud cases, interesting articles in the Atlantic or New Yorker, and the catch that was definitely a catch. Most of my tweets (oh God) on climate issues over this period would have been more satisfying for climate skeptics than environmentalists. The Cook 97% fraud case has been a popular issue with skeptics, and understandably so. The climate science consensus has been systematically overestimated by untrained researchers, fraud, invalid methods, failure of peer review, and an ancient enemy of science that I will elaborate upon in upcoming peer-reviewed literature. I've occasionally reminded people that I'm not a climate skeptic, and I try to cleanly separate climate science from estimates of the consensus from policy issues and so on. These are all very different things to me. Because I've called out the Cook fraud, people seem to have sorted me into the skeptic camp, including skeptics themselves. That's a mistake. My perceived scolding of skeptics was prompted by this: I know of no body of evidence that would lead a reasonable person to conclude that climate science is the most massive scientific fraud in history. This whole conspiracy issue has come up a lot recently as a potential personality disposition. I think it's worth lingering on what would have to be true for the science of human-caused climate change to be a massive fraud.
First, a lot of people would have to be in on it, virtually all climate scientists that work on the issue and the boundaries of the issue. I think for that to be true would in turn require both an unrealistic model of human psychology and an unrealistic model of how science works. If it were a fraud, one ethical and capable climate scientist could blow it open. Even a capable outsider like Steve McIntyre could blow it open. What do I mean? If CO2 doesn't cause warming, or if the true value of ECS was 0.1 °C or something, and the field was hiding this fact, this would be discoverable. Someone could report their analyses revealing the truth. Assume that they couldn't get into journals because the field is a fraud and they censor any off-message findings. They could post it online. Most people wouldn't believe it at first, would do the whole source/peer-review harrumphing. But it would certainly circulate among climate skeptics and eventually climate scientists would have to confront it. Republicans in Congress would start asking them about it and so on. Has that happened? Not to my knowledge. Where is there a finding or analysis that debunks the AGW hypothesis? Instead, all we see are lower estimates of human forcing, ECS, model quality, etc. When Judith Curry argues against climate science orthodoxy and overconfidence, she's not saying it's all false. Her scientific work offers lower estimates of ECS, for example (Lewis and Curry, 2014). Steve McIntyre has never argued that the hypothesis is false, or offered any debunking of it. His work has been focused on specific issues with specific papers and methods. If it were all a hoax, I think McIntyre would have been both inclined and able to expose it some years ago. If this is about disagreeing with the magnitude or confidence of prevailing climate science estimates, for example where they project a 3 or 4 °C warming and you think it's 1 or 2 °C, then that's not a fraud issue. That's disagreement. 
You can argue bias, tribalism, or political ideology as factors driving their estimates, but that's a long way from fraud. Fraud is willful deception. My vehemence against the fraud cases I discovered should not be confused for a loose definition of fraud. When I say "the Cook fraud", I mean concrete acts of fraud, like claiming this: When they did this: They lied. The ratings were not independent. They asked each other for feedback on their ratings, undermining both the validity of the ratings and the ability to compute rater reliability. Authors and journal identities were not hidden, which was essential for a subjective rating study – they were freely disclosed. They routinely broke researcher blindness, and routinely outed scientists who were friendly or hostile to their cause, sometimes even by "smell". In fact, the entire papers were freely distributed, shattering any notions of blindness. The first author himself broke protocol and blindness, identifying the authors of papers. And they all worked at home, so they could pull up the whole paper and break blindness anytime they wanted. We would panic if researchers broke blindness in a biomedical study – e.g. the person handing out placebo or treatment pills to patients. If the researchers in a double-blind study knew what condition the patient was in, what kind of pill or other treatment they were giving them, it could affect the study. They might interact differently with patients if they knew their condition, and those differences could have downstream consequences, like for example the patient inferring what group they were in from the researcher's confidence or other cues. Or something about the researcher's administration of more complex treatments could change were blindness broken. In any case, if a biomedical study claimed that the researchers were blind, and it turned out they weren't, that study would be retracted in a heartbeat. Everyone would get it. This is much worse. 
This is a subjective rating study where the researchers read textual material and made complex inferences from it. Given the nature of the topic, blindness to author and journal was critical, as would be the procurement of qualified neutral raters situated in a controlled environment on campus, not activists working from home. Breaking blindness in this kind of study is much more serious than for a pill handler. And lying about researcher blindness is the crux of the fraud issue in both cases. That's what fraud is. They knew they had this forum where they routinely broke independence and blindness, yet claimed their ratings were independent and blind. Show me that kind of fraud in climate science, and then we'll talk. Bias isn't fraud. Disagreement isn't fraud. Lying is fraud, but you can't just assume that people who disagree with you are lying. People on Twitter complained that I had called out skeptics unfairly, that climate activists or scientists behave worse. I don't like that kind of blame shifting. First, we have to keep in mind that human communication operates under lots of constraints. It's sequential and somewhat discrete. We can usually only say one thing at a time. This is especially true on Twitter. It's not reasonable to expect that when I criticize skeptics I'm also going to put it in context by criticizing environmentalists. Grown-ups should be moderately tough and resilient. Ethically, we should be open to criticism of our groups, our friends, ourselves. We know, a priori, that some of that criticism will be true (over the course of a human life.) There are features of human language that shape the ways we communicate on these and other issues. The content of present communication is usually much more salient than past communication, past experiences, knowledge of a person's character, etc. And that's just one class of constraints. 
For the last two years, I've thought about how we could create new languages that would enable humans to communicate classes of concepts that are difficult or impossible with current languages, as well as overcome some of the temporal and salience issues. Linguistics, constructed languages, and human concept formation and reasoning are deeply interesting topics. Eventually I think we'll have better ways of communicating, including constructed languages, but that's a very long-arc projection (50–200 years). It's useful to step back and consider the constraints in how we communicate with each other. That's a tangent, but some of these constraints impact our increased political polarization. Another tangent: It's fun to think about less ambitious ways to improve communication, like evolutions of Wolfram Research's Computable Document Format.

José L. Duarte

Social Psychology, Scientific Validity, and Research Methods.
