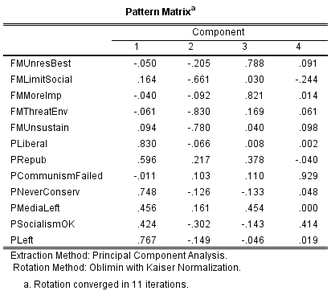

The Lewandowsky, Gignac, and Oberauer paper in PLOS ONE has been substantially corrected. Last fall I alerted the journal that there were serious errors in the paper. These included a 32,757-year-old in the data, along with a 5-year-old and six other self-reported minors. The paleoparticipant in particular had knocked out the true correlation between age and the conspiracy belief items (the authors had reported that there was no correlation between age and those items). See my original essay on this paper, what the bad data did to the age correlations, and lots of other issues here.

Deeply troubling issues remain. The authors have been inexplicably unwilling to remove the minors from their data, and have in fact retained two 14-year-olds, two 15-year-olds, a 16-year-old, and a 17-year-old. This is strange given that the sample started with 1,001 participants: dropping six cases would have cost them almost nothing. It is also wildly unethical.

To provide some context, here's the timeline:

October 4, 2013: A layperson alerted Lewandowsky, on his own website, that there was a 32,757-year-old and a 5-year-old in his data. There was no correction. Recall that he had reported analyses in the paper that included the age variable, and that these analyses were incorrect because of the 32,757-year-old.

August 18, 2014: On the PLOS ONE page for the paper, I alerted the authors to the 32,757-year-old, the 5-year-old, and the six other minors in their data (along with several other problems with the study). There was no correction.

September 22, 2014: I contacted PLOS ONE directly and reported the issue. I had waited over a month after the August 18 notification for the authors to correct their paper, but they had mysteriously done nothing, so it was time to contact the journal.

August 13, 2015: Finally, a correction was published. It is comprehensive, as there were many errors in their analyses beyond the age variable.

I'd like to pause here to say that PLOS ONE is beautiful and ethically distinctive.
They insisted that the authors publish a proper correction, and that it thoroughly address the issues and errors in the original. They also placed a link to the correction at the top of the original paper. The authors did not want to issue a proper correction. Rather, Lewandowsky preferred to simply post a comment on the PLOS ONE page for the paper and call it a corrigendum. This would not have been salient to people reading the paper on the PLOS ONE page, since they would have had to click on the Comments link and go into the threads to find it. Notably, Lewandowsky's "corrigendum" was erroneous and required a corrigendum of its own... It was also strangely vague and uninformative.

A serious ethical issue remains: they kept the minors in their data (except the 5-year-old). They had no prior IRB approval to use minors, nor did they have prior IRB approval to waive parental consent. In fact, the "ethics" office at the University of Western Australia appears to be trying to retroactively approve the use of minors while ignoring the issue of parental consent. This is ethically impossible, and wildly out of step with human research ethics worldwide. It also cleanly contradicts the provisions of Australia's National Statement on Ethical Conduct in Human Research (PDF), in particular paragraphs 4.2.7 through 4.2.10, and 4.2.12.

The conduct of the UWA ethics office is consistent with all their prior efforts to cover up Lewandowsky's misconduct, particularly with respect to Lewandowsky's Psych Science paper, which should be treated as a fraud case. UWA has refused everyone's data requests for that paper, and has refused to investigate. Corruption is a serious problem with human institutions, one that I increasingly think deserves a social science Manhattan Project to better understand and ameliorate. UWA is a classic case of corruption, one that mirrors those reported by Martin.
Here is the critical paragraph regarding minors in the PLOS ONE correction:

"Several minors (age 14–17) were included in the data set for this study because this population contributes to public opinions on politics and scientific issues (e.g. in the classroom). This project was conducted under the guidelines of the Australian National Health and Medical Research Council (NH&MRC). According to NH&MRC there is no explicit minimum age at which people can give informed consent (as per https://www.nhmrc.gov.au/book/chapter-2-2-general-requirements-consent). What is required instead is to ascertain the young person’s competence to give informed consent. In our study, competence to give consent is evident from the fact that for a young person to be included in our study, they had to be a vetted member of a nationally representative survey panel run by uSamp.com (partner of Qualtrics.com, who collected the data). According to information received from the panel provider, they are legally empowered to empanel people as young as 13. However, young people under 15 are recruited to the panel with parental involvement. Parental consent was otherwise not required. Moreover, for survey respondents to have been included in the primary data set, they were required to answer an attention filter question correctly, further attesting to their competence to give informed consent. The UWA Human Rights Ethics Committee reviewed this issue and affirmed that ‘The project was undertaken in a manner that is consistent with the Australian National Statement of Ethical Conduct in Human Research (2007).’"

The above may be difficult for people to parse and unpack. Here are the essentials we can extract from it:

1. There was no prior IRB approval for the use of minors. (UWA's review was retroactive, amazingly.)
2. Parental consent was not obtained for minors who were at least 15 years of age.
3. Obtaining parental consent for 13- and 14-year-olds was delegated to a market research company. However, the term "consent" is not used in this case. Rather, the authors claim that the market research company recruited these kids with "parental involvement". It's not clear what this term means.
4. The UWA "ethics" committee is attempting to grant retroactive approval for the use of minors and the lack of parental consent, as well as the delegation of consent obtainment to a market research company. They cite the National Statement of (sic) Ethical Conduct in Human Research, even though it contains no provision for retroactive approvals or cover-ups. In fact, the Statement does not contemplate such absurdities at all.
5. In the opening sentence, they claim they intended to use minors. I don't believe that for a minute. First, if they intended to use minors, they would have sought IRB approval to do so before conducting their study. Second, if they intended to use minors, they would not have only seven minors. Third, there is no mention of minors in the original paper, nor any mention of this notion of high school classrooms as the public square.

Facts 1 through 4 are revolutionary. This is an ethical collapse. Researchers worldwide would be stunned to hear of this. No IRB approval for the use of minors? No parental consent? A new age threshold of 15 for parental consent, and 13 for participation? Delegating parental consent to a market research company? An IRB acting as a retroactive instrument? An IRB covering up the unapproved use of minors? I'm not sure we've ever encountered any one of these things. Having all of these happen at the same time is a singularity, an ethical event horizon that dims the sun.

Notably, their citation of the NH&MRC page is a sham. The page makes no mention of age or minimum ages. It ultimately defers to Chapter 4.2, which takes for granted that there is IRB approval to use minors, as well as parental consent. (See the Respect and Standing Parental Consent sections.) It does not contemplate a universe where IRB approval is not obtained.
It's extremely disturbing that staff at UWA would try to deceive the scientific community with a sham citation.

I contacted UWA about these issues some months ago. As far as I can tell, they refuse to investigate. It's as though their ethics office is specifically designed to not investigate complaints if they think they can escape scrutiny and legal consequences. Mark Dixon of the UWA anti-ethics office said the following in an e-mail:

"However, this project was designed for a general demographic. Surveys targeted to a general population do not prohibit the collection of data from minors should they happen to respond to the survey."

"You are probably aware that the survey written up in the article was an online survey, where consent is indicated by the act of taking the survey."

"Inclusion or omission of outliers, such as the '5 year old' and the '32,000 year old', are reasonable scholarship when accompanied by explanatory notes. However, it would be unusual to actually delete data points from a data-set, so I don't understand your concern about the remaining presence of such data-points in the data-set."

"You expressed concern that the survey “… did not even ask participants for their ages until the end of the study, after participation and any "consent" had been secured". Demographic information is routinely collected at the end of a survey. This is not an unusual practice."

To say that these statements are alarming is an understatement. He thinks research ethics doesn't apply to online studies. He thinks we don't need to obtain consent for online studies, that simply participating is consent. He thinks 5-year-olds and 32,757-year-olds are "outliers" and that it is reasonable to retain them (is he aware that the age variable was analyzed?) He thinks researchers can ask someone's age at the end of a study. This person retains the title "Associate Director (Research Integrity)", yet he appears to know nothing of research or research integrity.
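Calling the 32,757-year-old an "outlier" that can simply stay in the data-set ignores what one impossible value does to any analysis of age. The sketch below uses made-up numbers, not the study's data: 60 hypothetical respondents aged 20 to 79 whose score rises with age, plus one entry of 32,757 with a mid-scale score of 3.5. The helper `pearson` is written out here (it is not from any source) so the example needs only the standard library.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical clean sample (NOT the study's data): ages 20-79 and a score
# that rises with age, plus a small repeating perturbation.
ages = list(range(20, 80))
scores = [1 + 0.05 * age + ((i % 5) - 2) * 0.3 for i, age in enumerate(ages)]

r_clean = pearson(ages, scores)

# Append one impossible age paired with an unremarkable, mid-scale score.
r_with_outlier = pearson(ages + [32757], scores + [3.5])

print(f"r without the bad age: {r_clean:.2f}")
print(f"r with the bad age:    {r_with_outlier:.2f}")
```

In this constructed example the correlation falls from about 0.9 to roughly zero, because the single age of 32,757 dominates the variance of the age variable. Where the distortion lands (toward zero, inflated, or reversed) depends on the bad case's score, which is why retaining an impossible age matters whenever the age variable is analyzed.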
The best explanations for Dixon's statements are that he has no training in human research ethics and/or he's corrupt. This is such an extraordinary case. For lay readers, let me note the following:

1. An online study is a study like any other study. The same research ethics apply. There's nothing special about an online study. Whether someone is sitting in front of a computer in a campus lab, or in their bedroom, the same ethical provisions apply.
2. We always require people to be at least 18 years of age, unless we are specifically studying minors (which would require explicit IRB approval).
3. We always include a consent form or information letter at the start of an online study. This form explains the nature of the study, what participants can expect, how long it should take, what risks participation may pose to the participant, any compensation they will receive, and so forth. Notably, the form will explicitly note that one must be at least 18 to participate.
4. We always ask age up front, typically on the first page after a person chooses to participate (after having read the consent form or information sheet).
5. We always validate the age field, such that the entered age must be at least 18 (and typically we'll cap the acceptable age at 99 or so to prevent fake ages like 533 or 32,757). All modern survey platforms offer this validation feature. A person cannot say that they are 5 years old, or 15 years old, and proceed to participate in an IRB-approved psychology study (where approval to use minors was not granted). We can't usually do anything about people who lie about their ages (relatedly, I doubt the 5-year-old age was accurate, but it won't matter in the end). This has nothing to do with online studies. The manner in which people report their age is the same in online and in-person studies: they're seated in front of a computer and self-report their age. But if someone tells us that they are minors, their participation ends at that moment. Because of this, there should never be minors or immortals in our data. If we wanted to card people, we could, both in-person and online (see Coursera's ID validation), but there's a trade-off between age verification and anonymity.
6. Note that Dixon's first claim contradicts the Correction's claim that they intended to use minors. Note also that Dixon's claim reduces to: if approval to use minors is not obtained, that's when you're allowed to use minors. He's saying that in a study not intended for minors, you're allowed to have them in your data. That would open an interesting and catastrophic loophole: we could always use minors simply by not asking for approval to use them...

At this point, I think PLOS ONE should just retract the paper. The paper is invalid anyway, but we can't have unapproved (or retroactively approved) minors in our research. UWA is clearly engaged in a cover-up, and their guidance should not inform PLOS ONE's, or any journal's, decisions.

This exposes a major structural ethical vulnerability in science: we rely on institutions with profound conflicts of interest to investigate themselves and their own researchers. We have broad evidence that they often attempt to cover up malpractice, though the percentages are unclear. Journals need to fashion their own processes, and rely much less on university "finders of fact". We should also think about provisioning independent investigators. The standards in academic science are much lower than in the private sector (I used to help companies comply with Sarbanes-Oxley).

In any case, UWA's conduct deserves to be escalated and widely exposed, and it will be. This is far from over: we can't ignore the severity of the ethical breaches here, and we won't. I doubt minors were harmed in this case, but institutions that would do what these people did are at risk of putting minors, and adults, in harm's way.
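The age-screening rule in point 5 (an age of at least 18, capped at around 99, with participation ending for anyone who reports being a minor) can be sketched as a plain function. This is a hypothetical illustration, not any survey platform's API. Platforms expose such validation through their own settings, and the names `screen_age`, `MIN_AGE`, and `MAX_AGE`, as well as the three outcome labels, are assumptions made for this sketch.

```python
MIN_AGE = 18  # assumes no approval to enroll minors
MAX_AGE = 99  # cap that rejects implausible entries such as 533 or 32,757


def screen_age(raw_answer: str) -> str:
    """Classify a self-reported age (hypothetical rule set).

    Returns "continue" for an eligible adult, "end" for a self-reported
    minor (participation stops here), or "re-enter" for input that is not
    a plausible age, so the form asks again instead of recording it.
    """
    try:
        age = int(raw_answer.strip())
    except ValueError:  # blank, "abc", "3.5", "32,757", ...
        return "re-enter"
    if age < 0 or age > MAX_AGE:
        return "re-enter"
    if age < MIN_AGE:
        return "end"
    return "continue"


for answer in ["35", "15", "5", "32757", "abc"]:
    print(answer, "->", screen_age(answer))
```

Rejecting out-of-range values at the point of entry is what keeps a 32,757 out of the data set in the first place. As the text notes, a check like this only constrains what people report; it cannot catch someone who lies about their age.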
Throwing out the rules regarding minors in research is a trivial recipe for a long list of bad outcomes, as is clinging to the nonsense that research ethics don't apply to online studies. These people are a disgrace and a menace.

APPENDIX A: UWA Personnel Who Were Alerted to the Minors

Murray Maybery, Head of the Department of Psychology: I contacted him on August 20, 2014, informing him of the minors in the data, as well as other issues. He did not respond.

Vice-Chancellor Paul Johnson: I contacted him (and Maybery again) on January 19, 2015, informing them of the minors and requesting the full data. Neither of these men responded. I tried again on February 15, 2015, and Maybery pointed me to the stripped data file for the related Psych Science study, essentially mocking my explicit request for the full data. (I had made clear that the available data was stripped, yet he still referred me to it without comment. There's some real ugliness in Perth.) Everyone knows about the stripped data: Lewandowsky stripped the age variable from the Psych Science study data file, along with other variables like gender and his Iraq War conspiracy item, an item whose inclusion weakens his theories. The paper says his minimum cutoff age was 10, and he won't release the full data. Maybery ignored my reports on the presence of minors and had nothing to say about it. Johnson never responded at any point, and was revealed to be cartoonishly corrupt in a similar episode. More recently, he was in the media for revoking Bjorn Lomborg's job offer because Lomborg likes to do cost-benefit analyses on climate change mitigation, and this is evidently controversial.

This whole situation is extraordinary. Only once before in my life have I encountered corruption that stilled and chilled me the way UWA has. I used to be a Court Appointed Special Advocate (CASA) for abused, abandoned, or neglected children in CPS care, and I'll write about an episode from that experience soon.
I'll update the list as events dictate, and document everything more thoroughly in other venues. I'll also publish similar reports on the conduct of Psych Science and APS when they were informed of the minors and other issues. I haven't posted much on these issues lately because my own work comes first, but I would never let these issues go. I prefer to be slow and methodical on these issues anyway, to give people a long time to do their jobs, to take my time to understand what I'm looking at, etc.

APPENDIX B: QUICK VALIDITY NOTE

The study was invalid because of the low quality and bias of the items, as well as the fact that some of the authors' composite variables don't actually exist. In social psychology, our constructs don't always exist in the sense that trees, stars, and the Dallas Cowboys exist. They're often abstractions that we infer based on sets of survey items or other lower-level data. I could put together a scale and call it Free Market Views, but that doesn't necessarily mean I'm measuring free market views. It has to be validated. People should read my items and make sure they actually refer to free market views. They should make sure my items are not biased. They should make sure that my items are clear, single-barrelled, and understandable to virtually all participants. They should run factor analyses to see if my items are measuring the same thing, or perhaps measuring more than one thing. Well, I should do all those things, but so should other people.

Journalists should be mindful of this reality: just because someone says they've measured free market views, and says that "free market views" predict this and that, doesn't make it so. Remember, this is not like trees, stars, and the Dallas Cowboys. You need due diligence. You need to look at someone's items and data and think about it, reason about it. So what happened here? Well, there were five purported free market items.
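The factor-analysis check described above can be sketched with simulated responses. This is an illustration under stated assumptions, not the analysis reported below and not the study's data. Five hypothetical items are generated so that three depend on one latent dimension and two on another (loadings of 0.8, 2,000 simulated respondents, uncorrelated dimensions). The eigenvalues of the items' correlation matrix are then counted against the common eigenvalue-greater-than-one (Kaiser) rule. NumPy is assumed to be available. Rotation (such as Direct Oblimin) is omitted here: it redistributes loadings across the retained components but does not change how many pass the eigenvalue rule.

```python
import numpy as np


def kaiser_factor_count(data):
    """Count principal components of the item correlation matrix with eigenvalue > 1."""
    corr = np.corrcoef(data, rowvar=False)         # items are columns
    eigenvalues = np.linalg.eigh(corr)[0][::-1]    # eigh returns ascending order; reverse it
    share = eigenvalues / corr.shape[0]            # proportion of total variance per component
    return int(np.sum(eigenvalues > 1.0)), eigenvalues, share


# Hypothetical simulated responses: NOT the study's data.
rng = np.random.default_rng(0)
n = 2000
factor_a = rng.standard_normal(n)   # assumed first latent dimension
factor_b = rng.standard_normal(n)   # assumed second, independent dimension
loading = 0.8
noise_sd = np.sqrt(1 - loading**2)  # keeps each item at unit variance

items = np.column_stack(
    [loading * factor_a + noise_sd * rng.standard_normal(n) for _ in range(3)]
    + [loading * factor_b + noise_sd * rng.standard_normal(n) for _ in range(2)]
)

count, eigenvalues, share = kaiser_factor_count(items)
print("components retained:", count)
print("eigenvalues:", np.round(eigenvalues, 2))
print("variance share:", np.round(share, 2))
```

With these settings the population inter-item correlation within each group is 0.8² = 0.64. The expected eigenvalues are therefore 1 + 2(0.64) = 2.28 for the three-item group and 1 + 0.64 = 1.64 for the two-item group, with the remaining three near 0.36. Two components are retained, even though all five items might be summed into one "scale".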
A simple factor analysis reveals that there are two strong factors in those five items. The strongest factor would credibly be called Environmentalism. The following items load on this factor:

"Free and unregulated markets pose important threats to sustainable development."

"The free market system is likely to promote unsustainable consumption."

"The free market system may be efficient for resource allocation but it is limited in its capacity to promote social justice."

The second factor carries these items:

"An economic system based on free markets unrestrained by government interference automatically works best to meet human needs."

"The preservation of the free market system is more important than localized environmental concerns."

Both factors include environmentalist items, but the first factor includes two of them, and those two load more heavily on that factor than the third (social justice) item does. That factor explains 43 percent of the variance in scores, while the second factor explains 25 percent. The second factor is distinct in carrying what I'd call the extremist items: unrestrained free markets "automatically work best" to meet (unspecified) "human needs", and preservation of free markets is more important than (unspecified) localized environmental concerns.

The whole scale is a mess because the items are a mess. For example, this item is not only double-barrelled (which we avoid), but the barrels point in opposite directions (which I've never seen before):

"The free market system may be efficient for resource allocation but it is limited in its capacity to promote social justice."

Which barrel wins? It's too complicated on several grounds. The term "social justice" is a leftist concept. The rest of us don't use it, don't grant its conception of justice, its definition of the good. In other words, not everyone thinks social justice is just. Perhaps more importantly, people who don't use the term might not know what it means.
Lastly, agreement with the double-barrelled item was scored as disagreement with free markets, yet I suspect many pro-market types would agree with it (if they know what social justice entails).

The scale in general is politically biased. It's written in the language of the left, particularly in the language of environmentalists, with concepts like "sustainability", another abstraction that non-environmentalists will not necessarily grant as a valid or useful concept, or even understand. It's not nearly as bad as the invalid SDO and RWA scales, but it's decently bad. We could never assume we're getting a clean read on free market attitudes with this scale. It's got too much environmentalism, left-wing language, and poor item construction. It should never be used. We don't really know what we're looking at when we see a correlation between this scale and some other scale or view; our best guess is that we're looking at the relation between environmentalism and that other variable.

Here's the pattern matrix from a PCA with Direct Oblimin rotation, eigenvalues greater than one. I included all the political items, not just the purported "free market" scale, because we can't assume a priori how we should slice them. It looks like we'd drop the communism item (it's probably on an island because no one talks about communism anymore) and perhaps the socialism item for similar reasons. This is stuff that researchers would normally do before reporting, or even analyzing, substantive results. Evidently, Lewandowsky, Gignac, and Oberauer didn't do the work. They just asserted composite variables that include the items they want to include, but that's not normally how social psychology works.

Substantive note: The correction does not address one of the substantive errors in the original. Gender is the largest predictor of GMO attitudes. They never reported this, but rather implied that gender did no work.
A lot of times boring variables like age and gender explain a lot of variance, and in this case gender explained more than any other. (Women trusted GMOs less, using Lewandowsky's primitive linear correlations on the scale index. It's unclear whether women actually distrusted GMOs, that is, where the women clustered on the items. A correlation doesn't tell you this. A bad researcher would say "women distrusted GMOs" given a negative correlation coefficient, without reporting descriptives or the women's actual, substantive placement on the scale, which could in fact be pro-GMO, just less pro than men.)
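To make that last point concrete, here is a small sketch with made-up numbers (not the study's data). The scores are on a hypothetical 1-7 GMO-trust scale with 4 as the neutral midpoint, and both men and women average above that midpoint. Yet coding women as 1 and men as 0 still yields a negative (point-biserial) correlation. The scale, the midpoint, and every value below are assumptions for illustration.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation; with a 0/1 variable this is the point-biserial r."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical GMO-trust scores on an assumed 1-7 scale (4 = neutral midpoint).
men = [6, 6, 5, 6, 7, 5, 6, 6]
women = [5, 5, 4, 5, 6, 5, 5, 4]

gender = [0] * len(men) + [1] * len(women)   # 1 = woman, 0 = man
scores = men + women

r = pearson(gender, scores)
mean_men = sum(men) / len(men)
mean_women = sum(women) / len(women)

print(f"point-biserial r = {r:.2f}")       # negative: women score lower
print(f"mean (men)   = {mean_men:.2f}")
print(f"mean (women) = {mean_women:.2f}")  # still above the midpoint of 4
```

Read alone, the negative r invites the conclusion "women distrust GMOs". The group means show both groups on the trusting side of the scale, which is why descriptives belong next to the coefficient.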

José L. Duarte: Social Psychology, Scientific Validity, and Research Methods

Archives: February 2019
