
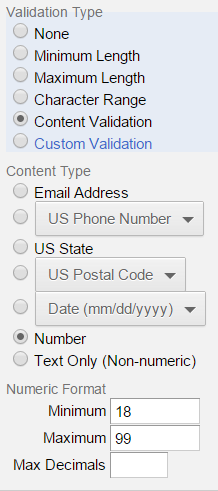

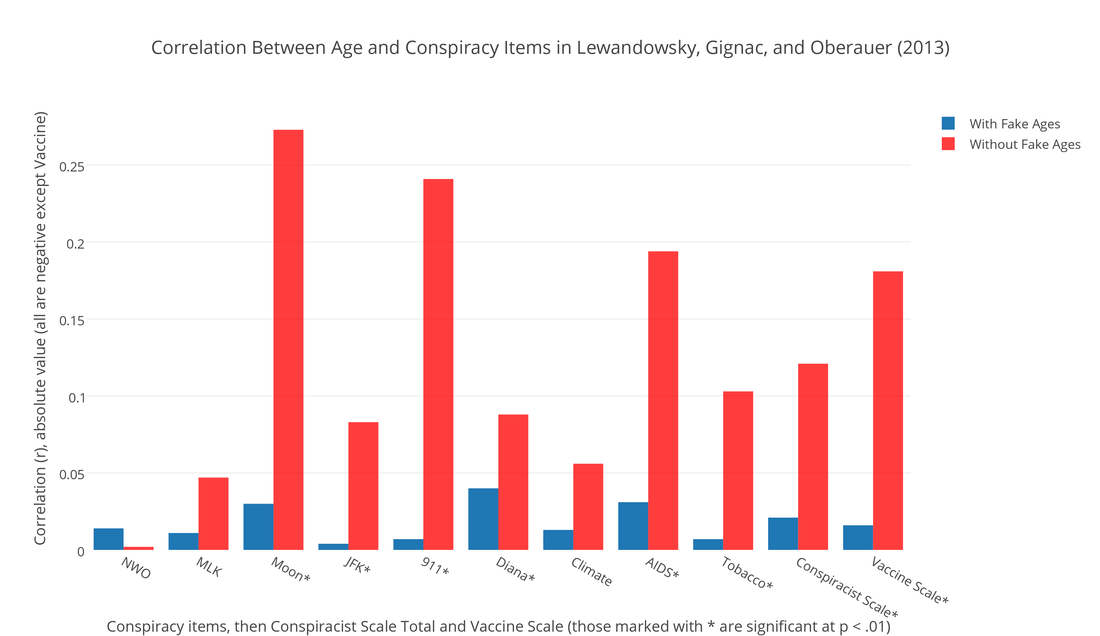

Lewandowsky, Gignac, and Oberauer (2013) authored "The Role of Conspiracist Ideation and Worldviews in Predicting Rejection of Science" in PLOS ONE. (Paper here.) This study has many of the same features as their Psychological Science scam. In that study, they falsely linked belief in the moon-landing hoax to climate skepticism when in fact only three participants out of 1145 held both of those beliefs, and over 90% of climate skeptics in their sample rejected the moon-landing hoax.

In the PLOS ONE study, we see the same broken conspiracy items: the New World Order item erroneously refers to the NWO as a group, the JFK item doesn't describe much of a conspiracy, and the free market items are written from a leftist perspective, using proprietary leftist terminology. The validity of this study would be in doubt regardless of the results.

A much more serious problem, however, is that there is bad data in the sample. Most consequentially, there is a 32,757-year-old, a veritable paleo-participant. (Data here.) There are also seven minors, including a 5-year-old, two 14-year-olds, two 15-year-olds, and two others. The authors were alerted to the presence of the minors and the paleo-participant over a year ago, and did nothing.

This would be a serious problem in any context. We cannot have minors or paleo-participants in our data, in the data we use for analyses, claims, and journal articles. It's even more serious given that the authors analyzed the age variable and reported its effects. They state in their paper:

"Age turned out not to correlate with any of the indicator variables."

This is grossly false. It can only be made true if we include the fake data. If we remove the fake data, especially the 32,757-year-old, age correlates with most of their variables. It correlates with six of their nine conspiracy items, and with their "conspiracist ideation" combined index. It also correlates with views of vaccines – a major variable in their study. See the graph below.
See the full Plotly graph here. (By "fake ages", I mean that the 32,757 age is presumably fake, and I would assume the 5-year-old is not in fact a precocious 5-year-old who somehow got through the uSamp.com / Qualtrics participant pool that the authors used. As we get into the 14- and 15-year-olds in the sample, it's easier to imagine these might be true ages, and I think we become very concerned about the possibility of actual minors in the sample.) (As noted in the graph, all the correlations between age and conspiracy theories were negative, perhaps contrary to common stereotypes.)

It's highly unusual to have out-of-range ages, especially five-digit ages, in survey data obtained electronically. Any of the online survey systems we use will validate the age field for us. That is, they won't accept an invalid age. No one should be able to say that they're 5 years old, or 32,757, and proceed to participate in an IRB-approved psychology study. The authors apparently used Qualtrics. I use it all the time. When building a survey, you customarily set the age validation right there on the side panel, like so:

What's even more concerning is that the authors reported the median age (43), and even the quartiles, in the paper. And as noted above, they analyzed age in relation to other variables. It's difficult to understand how any researcher would know the median age, quartiles, and correlations with other variables, but not encounter the mean age, which was 76. That mean would immediately set off alarms for any researcher using normal population samples. Any statistical software is going to show the mean as part of a standard set of descriptive statistics. (The mean after removing the fakes and minors is 43.) It's also hard to imagine how they did not notice the 5-year-old, or the 32,757-year-old, which is the outlier responsible for the inflated mean age. Min and max values are given by default in most descriptive statistics outputs.
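A single impossible value distorts exactly the statistics discussed here: the median and quartiles barely move, while the mean, the max, and every deviation score are dragged toward the outlier, which in turn can wash out a correlation. The minimal Python sketch below uses invented, synthetic numbers (not the study's data): 20 hypothetical adults whose made-up score declines with age, plus one added age of 32,757 whose score sits exactly at the sample mean. The same arithmetic applies to the real sample: if there were roughly 1,000 participants (an assumed figure, not one stated here), one entry of 32,757 adds about (32,757 − 43) / 1,000 ≈ 33 years to the mean, roughly the gap between 43 and 76.

```python
import math
import statistics


def describe(values):
    """Summary most stats packages print by default."""
    return {
        "n": len(values),
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        "min": min(values),
        "max": max(values),
    }


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical "clean" sample: 20 adults aged 20 to 58, with an invented
# score that declines with age plus a small alternating wobble.
ages = [20 + 2 * i for i in range(20)]
scores = [5.0 - 0.05 * a + (0.2 if i % 2 == 0 else -0.2)
          for i, a in enumerate(ages)]

# Add one impossible age whose score sits exactly at the sample mean.
bad_ages = ages + [32757]
bad_scores = scores + [statistics.fmean(scores)]

print(describe(ages))      # mean and median are both 39
print(describe(bad_ages))  # median moves to 40; mean jumps to 1597, max to 32757
print(round(pearson_r(ages, scores), 3))          # strongly negative
print(round(pearson_r(bad_ages, bad_scores), 3))  # close to zero
```

Because the added score equals the mean, that row contributes essentially nothing to the covariance while it inflates the age variance enormously, so the coefficient collapses toward zero. Dropping that single row restores the original correlation.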
That one data point – the paleo-participant – is almost single-handedly responsible for knocking out all the correlations between age and so many other variables. If you just remove the paleo-participant, leaving the minors in the data, age lights up as a correlate across the board. Further removing the kids will strengthen the correlations.

What concerns me the most is that these researchers were alerted that their data was bad on October 4, 2013 and did nothing about it. A commenter posted directly on Lewandowsky's webpage where he had announced the paper, a mere two days after the announcement:

"Additional problems exist as well. For example, one respondent claims an age of 32,757 years, and another claims an age of 5. Do you believe this data set should be used as is, despite these obvious problems?"

Almost a year later, on August 18, 2014, I posted a comment directly on the PLOS ONE page for their paper, and noted the bogus age data¹. They've known for a very long time that there is a 32,757-year-old in their data, along with a 5-year-old, two 14-year-olds, two 15-year-olds and two other minors, and they've known that they reported analyses on the age variable in their study. They did nothing. I think it's safe to assume that they've known for quite some time that the above-mentioned claim is completely false: "Age turned out not to correlate with any of the indicator variables."

A 32,757-year-old will grossly inflate the mean and corrupt the deviation scores and SD – any trained researcher would know that this could severely impact any correlation analyses. Some of their other effects seem to hold, but the coefficients are smaller controlling for age. However, I would not take any of their findings seriously given that:

I don't understand how anyone could let a paper just sit there if they know the data is bad and specific claims in the paper are false. No credible social psychologist would simply do nothing upon discovering that there were minors in their data, or a five-digit age. If someone reported that claims I'd made in a peer-reviewed journal article rested on bad data, fake participants, etc., I'd be running to my computer to check. I wouldn't be able to sleep if I knew I had something like that out there, and would have to retract the paper or submit a corrected version. You can't just leave it there, knowing that it's false. Their behavior is beyond strange at this point. The best case scenario here is that Lewandowsky is the worst survey researcher we'll ever see. My undergraduates do far better work than this. This is ridiculous.

There's more to the story. They also claimed "although gender exhibited some small associations, its inclusion as a potential mediator in the SEM model (Figure 2) did not alter the outcome." Gender cannot be a mediator between these variables: a mediator has to sit on the causal path, influenced by the predictor, and gender is usually pre-assigned and somewhat fixed, so I don't know what they're talking about. In any case, gender is strongly associated with both views of vaccines and views of GMOs. It is the strongest predictor of views of GMOs, out of all the variables in the study. It remains so in an SEM model with all their preferred variables included. (The effects are in opposite directions – women are more negative on GMOs and more positive toward vaccines than men.)

In any case, something is very wrong here. The authors should explain how the 32,757-year-old got into their data. They should explain how minors got into their data. They should explain why they did nothing for more than a year. This is a very simple dataset – it's a simple spreadsheet with 42 columns, about as simple as it gets in social science.
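Checking a flat file this small for impossible ages takes only a few lines. Here is a minimal sketch using Python's standard `csv` module; the `id` and `age` column names, the sample rows, and the 18 to 99 eligibility window are all hypothetical choices for illustration, not details of the authors' actual file.

```python
import csv
import io

MIN_AGE, MAX_AGE = 18, 99  # assumed adult-only eligibility window


def screen_ages(csv_text, age_column="age", id_column="id"):
    """Return (participant id, raw age) for every row whose age is
    missing, non-numeric, or outside [MIN_AGE, MAX_AGE]."""
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = (row.get(age_column) or "").strip()
        try:
            age = int(raw)
        except ValueError:
            flagged.append((row[id_column], raw))  # blank or not a number
            continue
        if not MIN_AGE <= age <= MAX_AGE:
            flagged.append((row[id_column], raw))  # out of range
    return flagged


# Hypothetical file: column names and rows are invented for illustration.
sample = """id,age,item1
1,43,3
2,32757,2
3,5,4
4,67,1
5,,2
"""
print(screen_ages(sample))  # [('2', '32757'), ('3', '5'), ('5', '')]
```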
It shouldn't have taken more than a few days to sort it out and run a correction, retraction, or whatever action the circumstances dictated. These eight purported participants allowed them to claim that age wasn't a factor. That let them focus on the glitzy political stuff, and on finding something negative to pin on conservatives. They don't tell you until late in the paper that conservatism is negatively correlated with belief in conspiracies – the exact opposite of what they claimed in the earlier scam paper that APS helped promote. Also note that we already know from much higher-quality research that Democrats are more likely than Republicans to believe in the moon hoax, though it's a small minority in both cases (7% vs. 4%), and that Democrats endorse every version of the JFK conspiracy at higher rates. I think some journals might be unaware that the pattern of these conspiracy beliefs across the left-right divide is already well-documented by researchers who have much higher methodological standards – professional pollsters at Gallup, Pew, et al. We don't need junky data from politically biased academics when we already have high-quality data from professionals.

Which brings us back to the previous paper. APS extended that scam in their magazine, fabricating completely new and false claims that were not made in the paper at all, such as that free market endorsement was positively correlated with belief in an MLK assassination conspiracy and the moon-landing hoax. Neither of these claims is true. The data showed the exact opposite for the MLK item (which we already knew from real and longstanding research) – free market endorsement predicted rejection of that conspiracy, r = -.144, p < .001. And there was no correlation at all between free market views and belief in the moon-landing hoax, r = .029, p = .332. APS just made it up...
They smeared millions of people, a wide swath of the public, attaching completely false and damaging beliefs to them. They've so far refused to run a correction. It's unconscionable and inexplicable. The Dallas Morning News has much higher standards of integrity and truthfulness than the Association for Psychological Science. I don't understand how this is possible. This whole situation is an ethical and scientific collapse.

I'm drafting a longer magazine piece about this and related scams, especially the role of journals and organizations like APS, IOP, and AAAS in promoting and disseminating fraudulent science. This situation is beyond embarrassing at this point. If anything were to keep me from running the magazine piece, it would be that it's so embarrassing, as a member of the field, to report that this junk can actually be published in peer-reviewed journals, that no one looks at the data, and that a left-wing political agenda will carry you a long way and insulate you from normal standards of scientific conduct and method. This reality is not what I expected to find when I chose to become a social scientist. I'm still struggling to frame it.

Normally, the host institution would investigate reports of fraud or misconduct, but the system appears to be broken. Lewandowsky has not been credibly investigated by the University of Western Australia. They've even refused data requests because they deemed the requester overly critical of Lewandowsky. That's stunning – I've never heard of a university denying a data request by referring to the views of the requester. UWA seems to have exited the scientific community. Science can't function if data isn't shared, if universities actively block attempts to uncover fraud or falsity in their researchers' work. To this day Lewandowsky refuses to release his data for the junk moon hoax study.
That's completely unacceptable, and there is no excuse for Psychological Science and APS to retain that paper as though it has some sort of epistemic standing – we already know that it's false, and the authors won't release the data, or even the national origin, age, or gender of the participants. It's ridiculous to have a system that depends entirely on one authority to investigate misconduct, especially an authority that will have a conflict of interest, as a host university often will. It puts everything on one committee or even one individual, dramatically reducing the likelihood of clean inquiries. The way journals and scientific bodies have tried to escape any responsibility is unconscionable, and completely unsustainable long-term.

I'm enormously disappointed with people like Eric Eich and APS head Alan Kraut for failing to act against Lewandowsky's earlier scam, and in the latter case, for failing to retract the fabricated false effects in the Observer magazine. Falsely linking millions of people to the belief that the moon landing was a hoax was an incredibly vicious thing for the authors to do, and for APS to do. Eich, Kraut, and that whole body should take participants' welfare – and that of their cohort in the public at large – a hell of a lot more seriously. I'm stunned by how little they care about the impact such defamation can have on human lives, and how willing they are to harm conservatives. Imagine how a person might be treated if people thought he or she believed the moon landings were a hoax. We have a responsibility to conduct ethical research, and to not publish false papers.
They inexcusably failed to act on the discovery that numerous claims in the earlier paper were false, that the sample was absurdly invalid and unusable, and that there are likely minors in the Psychological Science data. (The authors said in the paper that their cutoff age was 10, and neither the journal nor APS has responded to me on that issue, nor have they released the data. Lewandowsky refuses to release the data, and removed the age variable and lots of other data from the data file they've made available. Refusal to release data should lead to the automatic retraction of a paper.)

I'm very confused by the lack of concern about minors in the data. I don't know if this is controversial, but we can't have minors in our data. This is true at several levels. First, we would need specific IRB approval to have minors participate in a study – the study would have to be focused on children, not some web survey asking about three different assassinations. Second, we don't want minors in our studies for scientific reasons – we don't want to make claims about human behavior, claims that are implicitly centered on adult human behavior, based on non-adult data. Third, it's illegal to secure the participation of minors in research without their parents' consent. This PLOS ONE study purportedly used an American sample, and I think the same legal concerns would apply in Australia. I'm still stunned that APS doesn't care about minors in the Psych Science data – that was something I'd expect any journal to care a great deal about.

The ethical issues are much larger than those we normally face. If the minors in the data are real, that's a problem, and we couldn't use them. If they were adult participants who gave fake ages, then that's also a problem, and we couldn't use them, certainly not in analyses involving age. If they were not participants at all, then that's obviously a problem as well.
The authors should explain how this data came to be – they should've done this over a year ago. In any case, I hope that PLOS ONE makes a better showing than APS, and I'm confident they will. They know about the issue, and are investigating. We can't have minors and 32,757-year-olds in our data, and we certainly can't make false claims based on such bad data. Enough with the scams.

¹ NOTE: I just added (Jan 8, 2015) the information about the October 4, 2013 disclosure of the minors and 32,757-year-old. Brandon Shollenberger was the whistleblower (username Corpus in that comment on Lewandowsky's website). I've always known that I was not the original discoverer of the minors and the paleo-participant – my earlier draft stating that the authors knew since August 18, 2014 was charitable. In fact, I wasn't the first person to point out any of the major issues with Lewandowsky's recent publications. It was people outside of the field – laypeople, bloggers and the like – who discovered the lack of moon hoax believers in the moon hoax paper, who pointed out that the participants were recruited from leftist blogs, that they could be anyone from any country of any age, none of which has been disclosed, etc. Throughout this whole saga, it was laypeople who upheld basic scientific, ethical, and epistemic standards. The scientists, scholars, editors, and authorities have been silent or complicit in the malicious smearing of climate skeptics, free marketeers, and other insignificant victims. I was late to the party.

Also note that this business has been going on for some time. In 2011, Psychological Science published a bizarre sole-authored Lewandowsky paper where he investigated whether laypeople project the pause in global warming to continue. It's one of the strangest papers I've ever seen. He had two graphs/conditions – one of stock prices and one of global temperatures.
He touted a significant difference in the slopes participants extrapolated onto the graphs – they evidently projected a higher slope to the temps compared to the stock prices. This is supposed to mean something, apparently. But...ah... the graphs were labeled:

- Share Price SupremeWidget Corporation
- NASA - GISS

An obviously fake corporation name vs... NASA. I don't understand what's happening here. I feel like I stepped into something I fundamentally do not understand. Psychological Science?

66 Comments

"Their behavior is beyond strange at this point"


1/6/2015 04:53:36 am

I really shouldn't be surprised by anything that comes with Lewandowsky's name attached and yet somehow I still am. I think it's that others let him carry on with this sort of garbage.


1/6/2015 05:32:30 am

This is the third paper in which Lewandowsky has made statements which he knew to be false long before publication. In the pre-published version of the APS “Moon Hoax” paper made available in July 2012, he claimed that the survey had been publicised at the SkepticalScience blog. Barry Woods asked him about it privately and Lewandowsky lied, saying he knew it had been, since he had the URL somewhere. I asked John Cook of SkepticalScience about it independently in September 2012 and Cook lied to me. Then Cook told the truth to Lewandowsky around November 2012 in an email uncovered via FOI by Simon Turnill. The paper was published unchanged in June 2013. I had previously written to Psychological Science about it, and editor in chief Professor Eich told me he was forwarding my letter to Lewandowsky and said he'd get back to me with his remarks. He never did.


Mark Pawelek

1/6/2015 05:58:37 am

They don't seem very competent or caring people to me. They should've detected the fake outliers straight away. These professors may need to go back to school!


But José, don't you appreciate that the age-old conspiracy to make you look like a conspiracy theorist has salted the historical record with erstwhile conservatives sounding like generic fools on uncontroversial matters of science, and put some of them to bed with PR hacks and focus groups to compound the problem?


Barry Woods

1/6/2015 06:35:56 am

LOG12 (or is it Log13)


Barry Woods

1/6/2015 06:47:14 am

From the LOG12 paper (Psychological science)


Doug Proctor

1/6/2015 06:56:31 am

The Weapons of Mass Destruction lie and its absolute lack of blowback demonstrated a long time ago that the politically useful untruth is immune from complaint.


Barry Woods

1/6/2015 07:38:58 am

From PLOS One -


John M

1/8/2015 03:49:18 am

Well, that's pretty much the point. In fact, most people won't even read the whole article, never mind looking at the paper or data, but just the headline and maybe the first few paragraphs. That's also why part of the game is to get a politically useful headline and why articles bury the details and mention of any uncertainty or weaknesses deep in the article near the bottom, where few people will bother to read it.


DocBud

1/6/2015 07:58:41 am

José, you still have one instance of 35,757 in the post:


Joe Duarte

1/6/2015 11:17:03 am

Thanks. Fixed.


MikeInMinnesota

1/6/2015 08:17:52 am

I guess I just assumed the age problems were a result of a bug in the random number software they were using to generate their data.


1/6/2015 08:23:22 am

Doug Proctor: “The Weapons of Mass Destruction lie and its absolute lack of blowback demonstrated a long time ago that the politically useful untruth is immune from complaint”


1/11/2015 10:37:42 pm

Andy,

John M

1/6/2015 10:01:32 am

Let me offer an explanation for the 32,757 value and how it wound up in the data (though this explanation in no way excuses it not being scrubbed from the data before it was analyzed). As this page explains...


Joe Duarte

1/6/2015 11:16:39 am

Interesting point. But a max 16-bit INT would be off by 10. It's 32,757, so the max minus 10?


John M

1/6/2015 11:32:55 am

If age was entered as an integer, it's possible it was a person seeing how high an integer they could enter and that's where they stopped. That it's off by 10 is interesting, but that it matches on all of the other digits suggests some relationship to that limit somehow to me. If you use that website, maybe you could test a number field and see what the highest value you can enter is. It's possible the programmers set the interface limit to 10 less than the internal maximum. I'd also be curious to know what it does to negative ages.

Anon

1/6/2015 09:11:32 pm

If it was written to file as a signed integer, and -9 was used as a missing data flag, but then later read from the file as an unsigned integer, you might end up with that value.

dean_1230

1/12/2015 01:23:29 am

One other option, however remote this may be, is that they're using a spreadsheet and the "date" formatting and it's converting the date to the integer. In this case, 32757 in Excel is the integer form of the "short date" September 6th, 1989.
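The arithmetic behind the three explanations offered in this thread can be checked in a few lines of Python. This is a sketch only: it tests whether each hypothesized mechanism can produce the number 32,757, and says nothing about which, if any, actually happened. It assumes two's-complement 16-bit storage for the integer hypotheses and Excel's default 1900 date system, in which serial numbers after February 1900 count days from December 30, 1899.

```python
from datetime import date, timedelta

AGE_VALUE = 32757

# Hypothesis 1: related to the signed 16-bit maximum (2**15 - 1 = 32,767).
INT16_MAX = 2**15 - 1
gap_from_int16_max = INT16_MAX - AGE_VALUE

# Hypothesis 2: a -9 missing-data flag stored as signed 16-bit and read back
# as unsigned 16-bit (two's-complement reinterpretation).
minus_nine_as_uint16 = (-9) & 0xFFFF

# Hypothesis 3: a spreadsheet date serial. In Excel's 1900 date system,
# serials after Feb 1900 map to 1899-12-30 plus n days.
EXCEL_EPOCH = date(1899, 12, 30)
as_excel_date = EXCEL_EPOCH + timedelta(days=AGE_VALUE)

print(gap_from_int16_max)         # 10
print(minus_nine_as_uint16)       # 65527
print(as_excel_date.isoformat())  # 1989-09-06
```

The 16-bit maximum is 32,767, ten above the recorded age, as noted above; reinterpreting a -9 flag as an unsigned 16-bit value gives 65,527 rather than 32,757; and serial 32,757 does correspond to September 6, 1989.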

John M

1/6/2015 10:29:12 am

"Their behavior is beyond strange at this point."


1/6/2015 07:34:50 pm

That's good. Only another how many now for him to acknowledge?


Joe Duarte

1/7/2015 01:19:21 am

He should've acknowledged it in August when he was alerted to it. It doesn't matter to acknowledge it now. It should never have happened -- it's absolutely inexcusable to have a five-digit age and a bunch of minors in your published data. This is garbage.


Barry Woods

1/7/2015 03:58:48 am

It serves his purpose...

James

1/8/2015 04:35:23 pm

I'd support this comment. I've been a professional market researcher for over 30 years, and often looked askance at academic survey research. But Lew is in a league of his own, his work is unbelievably bad.

Sheri

1/7/2015 02:43:58 am

It seems likely that Lew believes as long as he acknowledges the error, says he's sorry, then no one will care and he can continue on his current track. That seems to work for much of the political arena now. Just be angry that something happened, say you're sorry or that you'll investigate and then pretend the whole thing did not happen. Lew seems quite political.


Barry Woods

1/7/2015 04:07:47 am

In the LOG 12 (NASA faked the Moon landing, therefore (climate) science is a hoax) paper - it says less than 10 yr old and greater than 95 yr old responses were excluded.

Gary

1/7/2015 04:01:46 am

I suspect that age was calculated from date of birth data entered by the survey participants. In the data I work with daily I frequently see incorrectly entered dates. The very first thing I do is locate and flag such errors. It's the peak of incompetence not to examine raw data before doing any analysis when the frequent occurrence of such errors is well known. Peer reviewers share equal blame with the author for not finding the problem.


osseo

1/7/2015 06:44:52 am

Gary, it was peer reviewed? How old were the reviewers?


classical_hero

1/10/2015 07:07:13 am

They must be dinosaurs, to allow an age that old.

RogueElement451

1/8/2015 12:39:46 am

It is absolutely incredible that such lame (can I say lame?)


RomanM

1/8/2015 02:57:19 am

As in his earlier paper, Lewandowsky failed to do even the simplest quality control checks on the remaining respondents who managed to notice the trick question "catch1". People will sometimes answer without even reading the questions or use patterns to quickly finish a survey. This appears to be the case for a substantial number of individuals in Lewandowsky's opus.
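One of the simplest screens for the pattern-answering described here is to flag respondents who give the identical answer to every item. A minimal Python sketch, with invented respondent IDs and answers; a real screen would also weigh reverse-keyed items and attention checks, and this is not meant to reproduce any analysis discussed in this thread.

```python
def is_straightliner(responses):
    """True when a respondent gave the same answer to every item (needs 2+ items)."""
    return len(responses) > 1 and len(set(responses)) == 1


def flag_straightliners(data):
    """Return the IDs of respondents whose answers never vary."""
    return [pid for pid, answers in data.items() if is_straightliner(answers)]


# Hypothetical respondents: IDs and 1-to-4 Likert answers are invented.
sample = {
    "p001": [4, 4, 4, 4, 4],
    "p002": [1, 3, 2, 4, 2],
    "p003": [2, 2, 2, 2, 2],
}
print(flag_straightliners(sample))  # ['p001', 'p003']
```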


1/9/2015 06:57:03 am

RomanM


RomanM

1/9/2015 11:42:13 pm

Geoff, you ask:

RomanM

1/9/2015 11:46:07 pm

Looks like HTML formatting doesn't work and that the number of lines is restricted. I'll try posting the table elsewhere and link to it.

RomanM

1/10/2015 12:49:01 am

Table available here:

Joe Duarte

1/10/2015 01:26:28 pm

Yes, sorry Roman, HTML does not work in the comments on Weebly-hosted websites apparently. It will work on the new website, which will go live any day now.

Joe Duarte

1/9/2015 07:28:14 am

Good work sir.


Jeff Id

1/8/2015 11:23:25 am

Jose,


Stephen Pruett

1/9/2015 07:42:28 am

This is particularly disheartening and disgusting to me as a department head for a biomedical-research oriented department. We spend untold hours on ethics training, responsible conduct of research training, IRB approvals, IACUC approvals, Biosafety approvals, lab safety inspections, fire safety inspections, etc., etc., etc. These have all been presented to me as absolutely mandatory, and I have been told that failure to comply is potentially a career ending act. Yet, we have in this post a description of one person blatantly ignoring numerous ethical and compliance regulations at two different institutions with complete impunity. I sincerely hope that your post is wrong and that this is not related to the researcher's political leanings (e.g., left is always fine but right has to actually follow the rules), but in the absence of any action by the author, the institutions, the societies, or the journals, it is difficult to conclude otherwise. I don't think the scientific community as a whole realizes that this could literally be the first story in future history books in the chapter describing the demise of science in the 21st century.


Joe Duarte

1/9/2015 10:25:44 am

Stephen, thank you for your comment. It's a gust of fresh air and sanity in this troubling situation, and it's exactly how I'd expect any credible scientist to feel.


Barry Woods

1/11/2015 03:18:27 am

very true - University of Western Australia gave Prof Lewandowsky, Cook and Marriott a clean ethics bill of health for the Recursive Fury paper (Frontiers in Psychology), with UWA investigating the failings of its own ethics department.

1/11/2015 11:09:20 pm

Joe,

1/11/2015 01:26:11 am

RomanM


nightspore

1/11/2015 04:31:05 am

Does anyone know whether Elizabeth Loftus has expressed any misgivings about having appeared on the masthead with this guy? (I'm thinking of the "Subterranean war on science" paper.)


RomanM

1/11/2015 11:11:03 pm

chrisB, there is nothing strange about the distribution of the last digit of the age data.


ChrisB

1/12/2015 02:57:25 am

I was wondering if you had applied the Yates's correction for continuity. As such I get a p<0.005


RomanM

1/12/2015 03:39:15 am

Yates correction is used in 2x2 contingency tables or in a 2x1 goodness-of-fit test for a simple binomial. The net effect of applying the correction is to reduce the magnitude of the chi-square statistic and correspondingly increase the p-value. It would not be used here with a multinomial distribution with 10 categories.

1/12/2015 02:48:18 am

"Throughout this whole saga, it was laypeople who upheld basic scientific, ethical, and epistemic standards."


1/12/2015 07:32:30 pm

Paul: Not sure we'd need much extra research here. The result is codified as "extraordinary claims need extraordinary evidence" which implies, of course, that we lower our guard when finding what we had expected to find.


Brandon Shollenberger

1/12/2015 03:05:47 am

I've noticed a couple people above are talking about suspicious responses where people give the same answer(s) to every question. That reminds me of an issue I brought up in my comment which first pointed out the problematic age values in this data set.


1/13/2015 05:55:12 am

Brandon


srp

1/13/2015 09:08:13 am

I am not a psychologist, but I believe there is a lot of empirical work backing up the practice of asking subjects the same question different ways, e.g. in things like the MMPI. In the case of the questions Brandon cited, though, these are NOT the same thing--each is a different aspect of a chain of arguments. So the Chambers critique would not apply even were it valid.

Dan

1/15/2015 02:25:05 am

I can think of two possible explanations for why someone may be unsure about CO2 being the driver of climate change, yet be convinced that humans cause global warming.


Mark Blumler

1/13/2015 12:03:30 am

Really interesting and informed discussion! I only wish to emphasize that it is human nature to make errors that skew the data so as to conform with one's beliefs. Unless we clearly distinguish that from fraud, friends of the researcher in question will be reluctant to point out errors, once they are published. And yes, Lewandowsky does seem to be a fraud.


1/13/2015 05:24:31 pm

Srp


TinyCO2

1/15/2015 04:10:55 am

I think that questionnaires about climate change are a minefield for unreliable answers. People just don’t know enough about the subject and that very much includes Dr Lewandowsky. The questions tend to be lacking in the subtlety of the issues. I’m aware that when I’ve filled out climate questionnaires I know my answers paint me as a warmist. In reality I don’t even consider myself a lukewarmer since that suggests I don’t have much of a protest against the science and its solutions. If a question is worded badly my answers may appear conflicted. Questions that change direction of positive to negative are easy to miss when people are in a hurry or confused, so again they may make the wrong answer. Words like ‘significant’ mean very different things to different people. Most members of the public would not class a few points of a degree warming as significant but may be sure that hurricanes and tornados have increased significantly. Both views would be wrong. Many are unaware where movie hype stops and science begins. Their responses are not a measure of educated opinion, merely climate change advertising.


RO

1/14/2015 12:04:43 am

Geoff, slight changes to your interesting post above:


1/15/2015 11:25:06 pm

There is now a brief Corrigendum posted at the PlosOne comment site


John M

1/16/2015 06:41:49 am

"In fact, I wasn't the first person to point any of the major issues with Lewandowsky's recent publications. It was people outside of the field – laypeople, bloggers and the like, who discovered the lack of moon hoax believers in the moon hoax paper, who pointed out that the participants were recruited from leftist blogs, that they could be anyone from any country of any age, none of which has been disclosed, etc. Throughout this whole saga, it was laypeople who upheld basic scientific, ethical, and epistemic standards. The scientists, scholars, editors, and authorities have been silent or complicit in the malicious smearing of climate skeptics, free marketeers, and other insignificant victims. I was late to the party."


John M

1/17/2015 05:46:46 am

There is apparently another guy named Duarte who is making himself a thorn in the side of those trying to create alarm over climate change on the opposite side of the planet, via Watts Up With That:


johanna

1/17/2015 11:48:14 am

Geoff Chambers, thanks for your comment. But for me it is like the Curate's Egg.


1/17/2015 09:29:12 pm

Johanna



José L. Duarte

Social Psychology, Scientific Validity, and Research Methods.
